Chapter 1: Introduction

Welcome to the User Manual for Replay Test Engine (RTE), your regression and verification testing tool designed to enhance the quality and reliability of developments and configuration changes within the SAP software ecosystem. This manual is intended for SAP developers, Functional Consultants, QA testers, and Key Users involved in testing SAP program modifications or verifying the impact of configuration adjustments.

The dynamic nature of SAP systems, with frequent enhancements, bug fixes, customizations, and configuration updates, carries an inherent risk: changes intended to improve one area can inadvertently impact other, seemingly unrelated functionalities. Ensuring that modifications deliver their desired benefits without introducing new errors or regressing existing features is paramount for maintaining system stability, user trust, and business continuity. RTE directly addresses this critical challenge by providing a structured, efficient, and powerful framework for conducting comprehensive regression testing of your SAP programs and verification of configuration outcomes.

1.1 Purpose of RTE

The primary purpose of RTE is to empower all teams to verify that modifications made to SAP programs, or changes to system configuration, have not negatively impacted their output for specific, well-defined scenarios. It achieves this by allowing you to:

- Capture a Baseline: Precisely record the state of key program variables before any code or configuration changes are applied. This "reference run" acts as your trusted baseline, representing the correct and expected behaviour.

- Execute and Compare: After program modifications or configuration updates, re-execute the program and use RTE's robust comparison engine to automatically identify any deviations from the established reference.

- Analyse and Adapt: Investigate identified differences with detailed insights and, when necessary, utilize advanced data mapping capabilities to handle intentional structural changes or compare against different programs.

By systematically comparing "before" and "after" states, RTE helps you catch regressions early in the cycle, reducing the cost and effort associated with fixing issues later.

1.2 The RTE Workflow

At its heart, RTE operates on the principle of structured comparison, integrated directly into your development, configuration, and testing lifecycle. The typical workflow involves several key stages:

- Instrumentation: Identify the critical internal variables within your SAP program (custom or standard) whose state you need to monitor. This is achieved with minimal code intrusion, typically by adding a single line of ABAP code using static methods from the ZCL_RTE class (ZCL_RTE=>EXPORT_DATA). This can often be done via a simple enhancement, requiring only very basic ABAP knowledge. You can also optionally use methods like ZCL_RTE=>IN_RTE( ) for test-specific logic or ZCL_RTE=>COMBINE_DATA( ) to enrich data before export.

- Creating Reference Runs: Access the central RTE transaction (ZRTE_START) and use the "Run program" function. Execute your instrumented program before applying any changes (or with a known 'good' configuration), using specific program variants to ensure consistent results. Mark these initial executions as "reference runs". These runs encapsulate the expected, correct output for those scenarios.

- Implementing Program or Configuration Changes: Proceed with your development activities or configuration adjustments.

- Performing Comparisons: Return to ZRTE_START and use the "Compare runs" function. RTE offers several modes:

  - Re-run the modified program for a specific variant and compare it against its reference.

  - Re-run for all reference variants and compare each against its baseline.

  - Compare any two arbitrary historical runs.

- Analysing Results: RTE will highlight any discrepancies in output (raw data differences, structural changes, or detailed content variations if using iData comparison). If intentional structural changes were made to your data, or if you're performing a cross-check against a different program, RTE's advanced "iData parameters" allow for sophisticated data mapping (renaming fields, changing types, filtering rows, etc.) to enable meaningful comparison.

- Managing and Approving Runs: Use "Manage runs" to view or delete old test data. Crucially, after verifying that the output of a modified program or new configuration is correct, you can "Approve" the new runs within the comparison tool, promoting them to become the new reference baseline for future tests.

1.3 About This Manual

This manual guides you through all features of RTE. It assumes basic SAP navigation skills. While Chapter 2 discusses code, instrumenting standard programs for configuration checks is designed to be accessible even with minimal ABAP exposure. Key Users can often leverage RTE for programs already instrumented by developers or consultants.

Important Information

This box draws your attention to crucial details, prerequisites, or concepts that are essential for a comprehensive understanding or the successful execution of the procedures described. Careful review of this information is highly recommended.

Best Practice

The "Best Practice" box offers guidance and recommendations for optimal usage, efficiency, or adherence to established standards. Following these suggestions can lead to improved outcomes, more robust implementations, or a more streamlined workflow.

Warning

A "Warning" box serves to alert you to potential risks, common pitfalls, or actions that could result in errors, data loss, system instability, or other undesirable consequences. It is critical to heed these notices and proceed with caution to avoid potential issues.

Chapter 2: Defining Test Data

To enable RTE to perform comparisons, you first need to instruct it which data within an SAP program (custom or standard) should be captured during a test run. This is achieved by adding a small amount of code directly into the program you intend to test. The integration is designed to be straightforward, primarily using static methods from the global class ZCL_RTE, which means you can call them directly without first creating an instance of the class.

2.1 Exporting Data for Comparison

The core mechanism for identifying test data is the EXPORT_DATA method. By calling this method, you specify an internal variable (like an internal table or a structure) whose content RTE should save when the program is executed via the RTE tool.

The simplest way to use this is with a single line of code:

```abap
ZCL_RTE=>EXPORT_DATA( iv_var_name = 'LT_OUTPUT_TAB' i_data = <lt_tab> ).
```

Let's break down the parameters:

- IV_VAR_NAME: (Input, Type CHAR30) This is the logical name you assign to the data being exported. This name will be displayed within the RTE tool during comparison setup and results viewing. Important: This name does not have to match the actual ABAP variable name in your program. Choose a descriptive name that makes sense in the context of your test (e.g., 'FINAL_OUTPUT_TABLE', 'HEADER_DATA').

  - Important: The logical name provided in the IV_VAR_NAME parameter must adhere to standard ABAP variable naming rules. It should primarily consist of letters and numbers. The following special characters are also permitted: underscore (_), exclamation mark (!), percentage sign (%), dollar sign ($), ampersand (&), and asterisk (*). Avoid other special characters or spaces.

  - Warning: When multiple EXPORT_DATA calls are made within a single program execution (one test run), ensure that the IV_VAR_NAME used for each distinct data element is unique. If you assign the same logical name (IV_VAR_NAME) to two or more different actual variables (I_DATA) within the same run, RTE will not raise an explicit error. However, the data associated with that name within the run record will likely be overwritten or combined unpredictably, leading to corrupted or meaningless results during comparison. If you export the same variable multiple times (e.g., in a loop to see its state at different points), RTE uses the ZRTE_UNIQN field (see section 2.3) to differentiate these snapshots.

- I_DATA: (Input, Type ANY) This is the actual data object (internal table, structure, elementary variable) from your program that you want RTE to capture and save for comparison. Pass the variable itself here.

  - Warning: The ZCL_RTE=>EXPORT_DATA method is designed to capture standard data types. It fully supports elementary variables, flat structures, and internal tables. Complex objects (instances of classes), data references, and nested internal tables (tables containing other tables directly as line items) are not supported for export via I_DATA.

- IV_CPROG: (Optional Input, Type SYST-CPROG, Default SY-CPROG) This parameter allows you to explicitly specify the program name under which the data should be stored in RTE. This is typically only necessary in specific scenarios, such as when testing a program that is started with SUBMIT by another wrapper program. In most cases, you can omit this parameter, and RTE will automatically determine the correct program name.
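Because I_DATA does not accept nested internal tables, a deep structure (one that contains a table component) has to be exported in supported parts. The following is a minimal sketch of that approach, assuming a hypothetical document structure with a flat header and an item table; the types, logical names, and values are illustrative only and are not part of RTE. Each part is exported under its own unique IV_VAR_NAME.

```abap
" Hypothetical deep structure: a flat header plus a nested item table.
TYPES: BEGIN OF ty_item,
         posnr TYPE n LENGTH 6,
         matnr TYPE c LENGTH 18,
         menge TYPE p LENGTH 13 DECIMALS 3,
       END OF ty_item,
       ty_items TYPE STANDARD TABLE OF ty_item WITH EMPTY KEY,
       BEGIN OF ty_header,
         docnr TYPE c LENGTH 10,
         bukrs TYPE c LENGTH 4,
       END OF ty_header,
       BEGIN OF ty_document,
         header TYPE ty_header,
         items  TYPE ty_items,
       END OF ty_document.

DATA ls_document TYPE ty_document.

" Illustrative sample data
ls_document = VALUE #(
  header = VALUE #( docnr = '4711' bukrs = '1000' )
  items  = VALUE #( ( posnr = '000010' matnr = 'MAT-A' menge = '2.000' )
                    ( posnr = '000020' matnr = 'MAT-B' menge = '5.000' ) ) ).

" LS_DOCUMENT itself is deep (it contains a table), so it is not passed to
" I_DATA directly. Its flat header and its item table are exported
" separately instead, each under a distinct logical name.
ZCL_RTE=>EXPORT_DATA( iv_var_name = 'DOC_HEADER' i_data = ls_document-header ).
ZCL_RTE=>EXPORT_DATA( iv_var_name = 'DOC_ITEMS'  i_data = ls_document-items ).
```

If several documents are processed in one run, the same two calls are simply repeated for each document, and the ZRTE_UNIQN field (see section 2.3) keeps the individual snapshots apart.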

Example Placement:

You should place the EXPORT_DATA call at a point in your program's logic where the variable you want to test contains the final data relevant for your comparison. Often, this is near the end of the program or subroutine, after all processing and data preparation for that variable is complete.

Consider this typical example where data is exported just after being displayed using ALV:

```abap
  " ... preceding program logic ...
  lo_alv_table->display( ).

  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'FINAL_ALV_DATA' i_data = gt_data ).
ENDFORM.
```
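For reference, a self-contained version of this placement might look as follows. This is a minimal sketch: the report name ZRTE_DEMO_ALV and the form name DISPLAY_ALV are hypothetical, table T000 merely supplies some data, and the ALV is created with the standard CL_SALV_TABLE class. The EXPORT_DATA call comes only after all processing of gt_data is finished.

```abap
REPORT zrte_demo_alv.   " hypothetical demo report

DATA gt_data TYPE STANDARD TABLE OF t000 WITH EMPTY KEY.

START-OF-SELECTION.
  PERFORM display_alv.

FORM display_alv.
  DATA lo_alv_table TYPE REF TO cl_salv_table.

  " Program logic: fill the output table
  SELECT * FROM t000 INTO TABLE @gt_data.

  TRY.
      cl_salv_table=>factory( IMPORTING r_salv_table = lo_alv_table
                              CHANGING  t_table      = gt_data ).
    CATCH cx_salv_msg.
      RETURN.   " no ALV could be created, so nothing is displayed or exported
  ENDTRY.

  lo_alv_table->display( ).

  " GT_DATA is complete at this point: capture it for RTE
  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'FINAL_ALV_DATA' i_data = gt_data ).
ENDFORM.
```

Note that a full-screen CL_SALV_TABLE display returns control only when the user leaves the list, so in this placement the export is executed at that moment.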

2.1.1 Instrumenting Standard SAP Programs

RTE can also be used to verify the impact of configuration changes. You can instrument standard SAP programs by adding an EXPORT_DATA call using SAP's Enhancement Framework. This is often a very simple process requiring minimal ABAP knowledge, making it accessible to Functional Consultants.

- How it Works:

  - Identify a standard SAP program or function module whose output is affected by the configuration you are changing.

  - Find a suitable enhancement point/spot (often at the end of a subroutine or function module, after the relevant data has been processed).

  - Create a simple source code enhancement implementation.

  - Add a single ZCL_RTE=>EXPORT_DATA( ... ) line to export the key internal table or structure that reflects the configuration's outcome.

- Benefit: This allows you to capture the "before" state of a standard process, make your configuration changes, then capture the "after" state and use RTE to precisely identify what changed, ensuring the configuration works as intended and hasn't had unforeseen side effects.
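When you create a source code enhancement implementation, the Enhancement Framework wraps your code in an ENHANCEMENT ... ENDENHANCEMENT block. A minimal sketch, assuming a hypothetical enhancement implementation named ZRTE_CFG_CHECK and a hypothetical variable lt_result that holds the configuration-dependent result and is visible at the chosen enhancement point:

```abap
ENHANCEMENT 1 zrte_cfg_check.    "active version
  " LT_RESULT is a placeholder for the standard program's variable that
  " reflects the configuration outcome at this enhancement point.
  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'CFG_RESULT' i_data = lt_result ).
ENDENHANCEMENT.
```

Because the same enhancement stays active for both the "before" and the "after" runs, both runs capture CFG_RESULT at exactly the same point in the standard logic, which is what makes them comparable.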

2.2 Manipulating Data for Testing

Sometimes, the raw data in your program variables might not be in the ideal format for comparison, or you might want to include additional context only during test runs. RTE provides helper methods to handle these situations without affecting the standard execution flow of your program.

2.2.1 Conditional Logic

To execute specific data preparation steps only when the program is being run as part of an RTE test, you can use the IN_RTE method.

- Method: ZCL_RTE=>IN_RTE( )

- Parameters: None

- Returns: abap_bool (abap_true if running within RTE, abap_false otherwise).

You can wrap your test-specific data manipulation logic within an IF ZCL_RTE=>IN_RTE( ) EQ abap_true. ... ENDIF. block. This ensures that this code is completely bypassed during normal program operation.
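A typical use is normalizing data only for the test. The sketch below, assuming a hypothetical output table lt_output filled from T000, sorts a copy before exporting it. Open SQL does not guarantee a row order without ORDER BY, so sorting can make snapshots easier to compare if row order matters for your comparison; working on a copy leaves the program's own table untouched.

```abap
DATA lt_output TYPE STANDARD TABLE OF t000 WITH EMPTY KEY.

" Hypothetical program output; no ORDER BY, so the row order is not guaranteed
SELECT * FROM t000 INTO TABLE @lt_output.

IF ZCL_RTE=>IN_RTE( ) EQ abap_true.
  " Test-only preparation on a copy: LT_OUTPUT keeps its original order
  DATA(lt_output_sorted) = lt_output.
  SORT lt_output_sorted BY mandt.
  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'OUTPUT_SORTED' i_data = lt_output_sorted ).
ENDIF.
```

Outside RTE, the copy and the sort are never executed, so normal runs pay no extra cost.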

2.2.2 Merging Data

A common requirement is to combine data from different sources before exporting it for comparison. For instance, you might want to add a specific field as an extra column to every row of an internal table being tested. The COMBINE_DATA method facilitates this. Note: This method supports adding fields from a structure or elementary variable to a base table or structure; combining two tables is not supported.

- Method: ZCL_RTE=>COMBINE_DATA( ... )

- Parameters:

  - I_A_TAB_OR_STR: (Input, Type ANY) The base internal table or structure to which you want to add data.

  - IT_A_FIELDS: (Optional Input, Type Range Table for Field Names) A range table specifying which fields from "I_A_TAB_OR_STR" should be included in the output. If omitted, all fields are used.

  - I_B_STR_OR_ELEM: (Input, Type ANY) The structure or elementary variable containing the data you want to add.

  - IT_B_FIELDS: (Optional Input, Type Range Table for Field Names) A range table specifying which fields from "I_B_STR_OR_ELEM" should be added to the output. Use this to select specific fields if "I_B_STR_OR_ELEM" is a structure.

  - E_TAB: (Output, Type REF TO DATA) A data reference that will point to the newly created internal table containing the combined data.

Example: Combining a Table with a System Field for Testing

Let's look at how to use IN_RTE and COMBINE_DATA together. Imagine you want to test the contents of table gt_usr, but for testing purposes, you also want each row to include the System ID (sy-sysid).

```abap
IF ZCL_RTE=>IN_RTE( ) EQ abap_true.
  ZCL_RTE=>COMBINE_DATA(
    EXPORTING
      i_a_tab_or_str  = gt_usr        " Base table or structure
*     it_a_fields     =               " Fields to be used in output
      i_b_str_or_elem = sy            " Structure or elementary variable to be added
      " Fields to be used in output
      it_b_fields     = VALUE #( ( sign = 'I' option = 'EQ' low = 'SYSID' ) )
    IMPORTING
      e_tab           = DATA(go_ref)  " Combined table
  ).

  FIELD-SYMBOLS: <gt_tab> TYPE ANY TABLE.
  ASSIGN go_ref->* TO <gt_tab>.

  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'GT_TAB_WITH_SYSID' i_data = <gt_tab> ). " Ensure unique IV_VAR_NAME
ENDIF.
```

Explanation of the Example:

- Check Execution Mode: IF ZCL_RTE=>IN_RTE( ) EQ abap_true. ensures the combination logic runs only during RTE tests.

- Combine Data: ZCL_RTE=>COMBINE_DATA is called:

  - gt_usr is the base table.

  - The sy structure provides the additional data.

  - it_b_fields specifically selects only the SYSID field from the sy structure to be added.

  - The result (a new table combining the gt_usr fields and sy-sysid) is created, and go_ref points to it.

- Export: ZCL_RTE=>EXPORT_DATA is called using the field symbol <gt_tab>, which is assigned to the combined table. It exports the combined data during an RTE run under a distinct logical name.

By using these methods, you can precisely define and even adapt the data captured by RTE for effective regression testing, without impacting the program's behaviour for end-users.
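IT_A_FIELDS works the same way for the base table. The following variation of the example above is a sketch, assuming COMBINE_DATA accepts several 'I'/'EQ' entries in the range table: it keeps only the USR02 fields BNAME, USTYP, and CLASS from gt_usr and adds SYSID from sy. The logical name GT_USR_SMALL_SYSID is illustrative.

```abap
DATA gt_usr TYPE STANDARD TABLE OF usr02 WITH EMPTY KEY.
FIELD-SYMBOLS <gt_tab_small> TYPE ANY TABLE.

SELECT * FROM usr02 INTO TABLE @gt_usr.

IF ZCL_RTE=>IN_RTE( ) EQ abap_true.
  ZCL_RTE=>COMBINE_DATA(
    EXPORTING
      i_a_tab_or_str  = gt_usr
      " Keep only these base fields from GT_USR
      it_a_fields     = VALUE #( sign = 'I' option = 'EQ'
                                 ( low = 'BNAME' )
                                 ( low = 'USTYP' )
                                 ( low = 'CLASS' ) )
      i_b_str_or_elem = sy
      " Add only SYSID from the SY structure
      it_b_fields     = VALUE #( ( sign = 'I' option = 'EQ' low = 'SYSID' ) )
    IMPORTING
      e_tab           = DATA(lo_ref_small) ).

  ASSIGN lo_ref_small->* TO <gt_tab_small>.
  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'GT_USR_SMALL_SYSID' i_data = <gt_tab_small> ).
ENDIF.
```

Leaving out columns that are irrelevant to the test keeps comparison results shorter and reduces the amount of data RTE stores for the run.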

2.2.3 Real-World Example

While the COMBINE_DATA method can be used for various data manipulations, a common and powerful use case is adding contextual information to a primary data table before exporting it for testing. This makes test results much easier to analyze, especially when dealing with data processed in loops or across different entities (like employees, materials, documents, etc.). This technique is also valuable for configuration verification, for instance, to check payroll results before and after a configuration change with an effective date.

Let's consider a scenario from SAP Payroll. Within the standard payroll driver, the EXPRT function processes the Results Table (RT) for each employee (PERNR) and payroll period (APER). For regression testing or configuration verification, simply exporting the RT table might not be sufficient, as you wouldn't immediately know which employee or period a specific RT entry belongs to when looking at the combined test results later.

The following code snippet, intended to be placed within the relevant part of the payroll logic (like function EXPRT, potentially via an enhancement for standard code), demonstrates how to use ZCL_RTE=>COMBINE_DATA twice to add both the employee number and the payroll period information as columns to the RT table, specifically for RTE test runs:

```abap
FIELD-SYMBOLS: <lt_tab>  TYPE ANY TABLE,
               <lt_tab2> TYPE ANY TABLE.

IF ZCL_RTE=>IN_RTE( ) EQ abap_true.

  " Step 1: Combine RT table with the Personnel Number (PERNR)
  ZCL_RTE=>COMBINE_DATA(
    EXPORTING
      i_a_tab_or_str  = rt[]        " Base table is RT
      i_b_str_or_elem = pernr       " Add data from PERNR structure
      " Select only the 'PERNR' field from the PERNR structure
      it_b_fields     = VALUE #( ( low = 'PERNR' sign = 'I' option = 'EQ' ) )
    IMPORTING
      e_tab           = DATA(l_out_data)    " Output: RT fields + PERNR field
  ).
  ASSIGN l_out_data->* TO <lt_tab>.         " Assign intermediate result

  " Step 2: Combine the intermediate table with the Payroll Period (APER)
  ZCL_RTE=>COMBINE_DATA(
    EXPORTING
      i_a_tab_or_str  = <lt_tab>    " Base table is the result from Step 1
      i_b_str_or_elem = aper        " Add data from APER structure
      " IT_B_FIELDS is omitted, so add ALL fields from APER structure
    IMPORTING
      e_tab           = DATA(l_out_data2)   " Output: RT fields + PERNR field + APER fields
  ).
  ASSIGN l_out_data2->* TO <lt_tab2>.       " Assign final result

  " Step 3: Export the final combined table
  ZCL_RTE=>EXPORT_DATA(
    EXPORTING
      iv_var_name = 'RT_DATA'       " Logical name for the exported data
      i_data      = <lt_tab2>       " The table containing RT + PERNR + APER
  ).

ENDIF.
```

Explanation:

- IF ZCL_RTE=>IN_RTE( )...ENDIF.: This ensures the entire data combination logic executes only when the program is run via RTE, having no impact on regular payroll runs.

- First COMBINE_DATA Call:

  - Takes the current RT internal table (i_a_tab_or_str = rt[]).

  - Adds data from the PERNR structure (i_b_str_or_elem = pernr).

  - Crucially, it_b_fields specifies that only the field named 'PERNR' from the pernr structure should be added as a new column to each row of the RT table.

  - The result is a new table (referenced by l_out_data, assigned to <lt_tab>) containing all original RT fields plus a PERNR field.

- Second COMBINE_DATA Call:

  - Takes the intermediate table <lt_tab> (which already contains RT + PERNR) as the base (i_a_tab_or_str = <lt_tab>).

  - Adds data from the APER structure (i_b_str_or_elem = aper).

  - Since it_b_fields is not provided this time, all fields from the aper structure are added as new columns to the table.

  - The result is the final table (referenced by l_out_data2, assigned to <lt_tab2>) containing the original RT fields, the PERNR field, and all fields from the APER structure.

- EXPORT_DATA Call: The final, enriched table <lt_tab2> is exported under the logical name 'RT_DATA'.

Outcome and Applicability:

By performing these combinations before the export, the 'RT_DATA' variable captured by RTE will contain not just the payroll results but also the associated employee number and period details directly within each row. This makes analyzing differences during comparison significantly easier, as the context is immediately apparent.

Universal Technique: While this specific example uses variables common in SAP Payroll (RT, PERNR, APER), the underlying technique is universally applicable. You can use ZCL_RTE=>IN_RTE and ZCL_RTE=>COMBINE_DATA in any SAP module to enrich your primary test data with relevant contextual information (like document numbers, material codes, company codes, dates, etc.) before exporting it with ZCL_RTE=>EXPORT_DATA, thereby enhancing the clarity and usefulness of your regression tests or configuration verifications.
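As an illustration outside Payroll, the sketch below enriches a hypothetical result table with a document number, company code, and posting date taken from a hypothetical context structure. All type and variable names are placeholders for your own program's data, and the sketch assumes COMBINE_DATA accepts several 'I'/'EQ' entries in IT_B_FIELDS. The user name field USNAM is deliberately not selected, which also keeps that personal data out of the exported table.

```abap
" Hypothetical result table without any context columns, and a hypothetical
" context structure: replace both with the variables of your own program.
TYPES: BEGIN OF ty_result,
         posnr  TYPE n LENGTH 6,
         amount TYPE p LENGTH 15 DECIMALS 2,
       END OF ty_result,
       BEGIN OF ty_context,
         docnr TYPE c LENGTH 10,
         bukrs TYPE c LENGTH 4,
         budat TYPE d,
         usnam TYPE c LENGTH 12,
       END OF ty_context.

DATA: lt_result  TYPE STANDARD TABLE OF ty_result WITH EMPTY KEY,
      ls_context TYPE ty_context.
FIELD-SYMBOLS <lt_enriched> TYPE ANY TABLE.

" Illustrative sample data
lt_result  = VALUE #( ( posnr = '000010' amount = '100.00' )
                      ( posnr = '000020' amount = '250.50' ) ).
ls_context = VALUE #( docnr = '0100000001' bukrs = '1000'
                      budat = '20240131'   usnam = 'TESTER' ).

IF ZCL_RTE=>IN_RTE( ) EQ abap_true.
  " Add DOCNR, BUKRS and BUDAT as columns; USNAM is intentionally left out
  ZCL_RTE=>COMBINE_DATA(
    EXPORTING
      i_a_tab_or_str  = lt_result
      i_b_str_or_elem = ls_context
      it_b_fields     = VALUE #( sign = 'I' option = 'EQ'
                                 ( low = 'DOCNR' )
                                 ( low = 'BUKRS' )
                                 ( low = 'BUDAT' ) )
    IMPORTING
      e_tab           = DATA(lo_enriched) ).

  ASSIGN lo_enriched->* TO <lt_enriched>.
  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'RESULT_WITH_CONTEXT' i_data = <lt_enriched> ).
ENDIF.
```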

2.3 Exporting Different Variable Types

Let's examine an example demonstrating how to export variables of different types (elementary variable, structure, and internal table) using ZCL_RTE=>EXPORT_DATA. This example also illustrates how RTE handles situations where the same logical variable name (IV_VAR_NAME) is exported multiple times within a single program execution, such as within a loop.

Consider the following ABAP code snippet:

```abap
DATA: lv_var TYPE string,
      ls_str TYPE t000,
      lt_tab TYPE TABLE OF usr02.

lv_var = 'Single variable'.
SELECT SINGLE * FROM t000 INTO @ls_str WHERE mandt = '000'.
SELECT * FROM usr02 INTO TABLE @lt_tab.

DO 3 TIMES.
  " Modify variables slightly in each loop iteration
  lv_var = lv_var && '__' && sy-index.
  ls_str-mtext = ls_str-mtext && '__' && sy-index.

  READ TABLE lt_tab ASSIGNING FIELD-SYMBOL(<ls_tab>) INDEX 1.
  IF sy-subrc EQ 0.
    <ls_tab>-accnt = sy-index. " Change only one field in the first row of the table
  ENDIF.

  " Export all three variables in each iteration
  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'LV_VAR' i_data = lv_var ).
  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'LS_STR' i_data = ls_str ).
  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'LT_TAB' i_data = lt_tab ).
ENDDO.
```

In this code:

- We declare a string (lv_var), a structure (ls_str based on T000), and an internal table (lt_tab based on USR02).

- We initialize these variables with some data.

- We loop three times (DO 3 TIMES.).

- Inside the loop, we slightly modify the content of each variable in each iteration.

- Crucially, within each loop iteration, we call ZCL_RTE=>EXPORT_DATA for all three variables, using the same IV_VAR_NAME ('LV_VAR', 'LS_STR', 'LT_TAB') respectively across iterations.

Viewing the Exported Data in RTE:

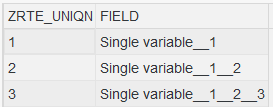

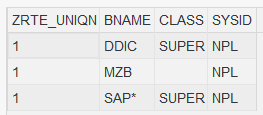

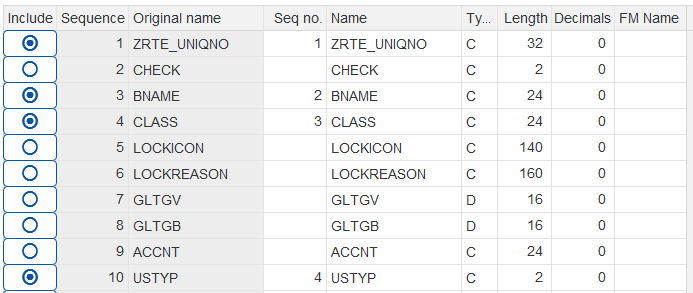

After executing this code via the "Run program" function in RTE, if you inspect the captured data for this run (as described in Chapter 4.4), you will observe the following:

-

Elementary Variable (

LV_VAR):

- Since

LV_VARis an elementary variable (string), its value is displayed in a single column with the generic headerFIELD. - Notice the

ZRTE_UNIQNcolumn. This field acts as a unique identifier for each distinct call toZCL_RTE=>EXPORT_DATAwithin the run for the sameIV_VAR_NAME. BecauseEXPORT_DATAfor "LV_VAR" was called three times (once per loop iteration), you see three rows, each with a differentZRTE_UNIQNvalue (1, 2, 3), reflecting the state oflv_varat the moment of each export.

- Since

-

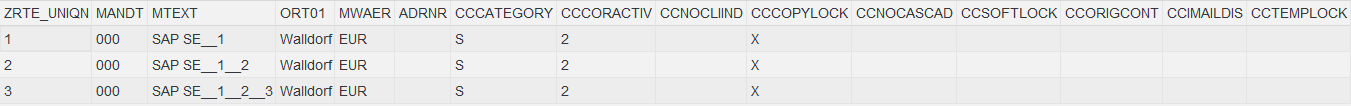

Structure (

LS_STR):

- For the structure

LS_STR, RTE displays the data using column headers that directly correspond to the field names of theT000structure (e.g.,MANDT,MTEXT,ORT01, etc.). - Similar to the elementary variable, the

ZRTE_UNIQNcolumn appears, again having distinct values (1, 2, 3) for each of the three timesEXPORT_DATAwas called for 'LS_STR', showing the state of the structure in each loop pass.

- For the structure

-

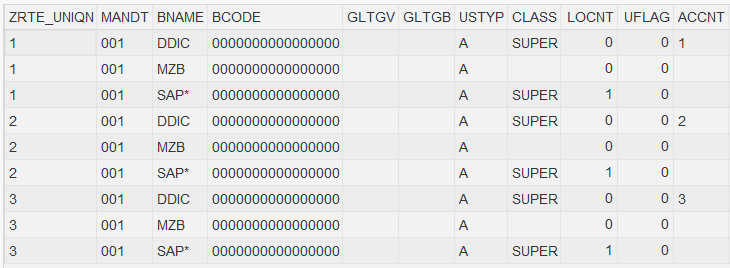

Internal Table (

LT_TAB):

- When viewing the internal table

LT_TAB, the column headers correspond to the fields of the table's line type (USR02, e.g.,MANDT,BNAME,ACCNT, etc.). - Here, the role of

ZRTE_UNIQNbecomes particularly clear. The internal table (lt_tab) contains multiple rows itself. RTE exports the entire table contents each timeZCL_RTE=>EXPORT_DATAis called for 'LT_TAB'. - Therefore, you will see multiple groups of rows in the display, each group corresponding to a single export call. The

ZRTE_UNIQNvalue will be the same for all rows belonging to a single export call (one snapshot of the table), but it will differ between the export calls made in different loop iterations. In this example, all table rows exported during the first loop pass will haveZRTE_UNIQN = 1, all rows from the second pass will haveZRTE_UNIQN = 2, and all from the third pass will haveZRTE_UNIQN = 3. This allows you to distinguish the complete state of the table as it was captured at each specific export moment. You can also see the effect of the modification within the loop: theACCNTfield for the first user (DDICin the screenshot) changes value (1, 2, 3) corresponding to theZRTE_UNIQNidentifier for that export set.

- When viewing the internal table

This example highlights how RTE handles different data types and preserves the state of variables even when exported multiple times under the same logical name within a single execution, using the ZRTE_UNIQN field to differentiate between these distinct export snapshots.

2.4 Data Storage

Internally, when ZCL_RTE=>EXPORT_DATA is called, the content of the provided variable (I_DATA) is serialized into a raw data format. This serialized representation is then saved persistently within dedicated database tables managed by the RTE tool. For complex types like internal tables or structures, this process typically involves converting each row of the variable into its raw data equivalent, with each field or cell of the source variable stored as a distinct record, or part of a record, in the RTE backend tables, along with metadata such as the run identifier, logical variable name, and export sequence (ZRTE_UNIQN).

Important Data Privacy (GDPR) Considerations:

It is crucial to understand that if the variables exported by ZCL_RTE=>EXPORT_DATA during a test run contain any Personal Data (as defined by GDPR or other applicable data privacy regulations, such as names, addresses, identification numbers, sensitive personal information, etc.), this personal data will be copied and stored redundantly within the RTE tool's backend database tables.

- User Responsibility: The responsibility for managing this captured data in accordance with GDPR, other relevant data privacy laws, and any internal company data protection policies lies solely with the user of the RTE tool and the organization implementing it. This includes considerations for data retention, access control, and deletion of personal data when it is no longer required for testing purposes.

- System Context: While RTE can be used in any SAP system, it is important to note that regression testing and configuration verification are most commonly performed in development (DEV) and quality assurance (QAS) systems. In many organizations, these non-production systems already utilize anonymized or pseudonymized data as a best practice for data protection. If your test systems contain such scrambled or non-personal data, the GDPR implications of using RTE are significantly reduced.

- Production Systems: If RTE is used in a production system or a system containing live personal data, extreme caution and strict adherence to data privacy protocols are essential. Ensure you have the necessary authorizations and a clear understanding of the data being captured and its lifecycle within RTE.

- Data Management in RTE: Use the "Manage runs" functionality (detailed in Chapter 6) to periodically review and delete old test runs, which will also remove the associated data from RTE's tables. This is a key mechanism for managing data retention within the tool.

Always be mindful of the nature of the data you are exporting with RTE and ensure your usage aligns with all applicable data privacy requirements and organizational policies.
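One way to limit the personal data that reaches RTE is to export a reduced copy. The following sketch assumes a hypothetical employee table with name columns: inside an IN_RTE( ) block it clears the names in a copy before exporting, so the program's own data stays unchanged. The personnel number remains in the copy and is still personal data, so this reduces the data captured but does not make it anonymous.

```abap
" Hypothetical output structure containing personal data
TYPES: BEGIN OF ty_employee,
         pernr      TYPE n LENGTH 8,
         last_name  TYPE c LENGTH 40,
         first_name TYPE c LENGTH 40,
         amount     TYPE p LENGTH 15 DECIMALS 2,
       END OF ty_employee.

DATA lt_employees TYPE STANDARD TABLE OF ty_employee WITH EMPTY KEY.

" Illustrative sample data
lt_employees = VALUE #( ( pernr = '00001001' last_name = 'Doe'
                          first_name = 'Jane' amount = '4200.00' ) ).

IF ZCL_RTE=>IN_RTE( ) EQ abap_true.
  " Work on a copy so the program's own table is never altered
  DATA(lt_export) = lt_employees.
  " Names are not needed to detect regressions in the calculated amounts
  LOOP AT lt_export ASSIGNING FIELD-SYMBOL(<ls_export>).
    CLEAR: <ls_export>-last_name, <ls_export>-first_name.
  ENDLOOP.
  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'EMPLOYEES_NO_NAMES' i_data = lt_export ).
ENDIF.
```

Alternatively, the IT_A_FIELDS parameter of ZCL_RTE=>COMBINE_DATA (section 2.2.2) can be used to pass on only the columns you actually need for the comparison.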

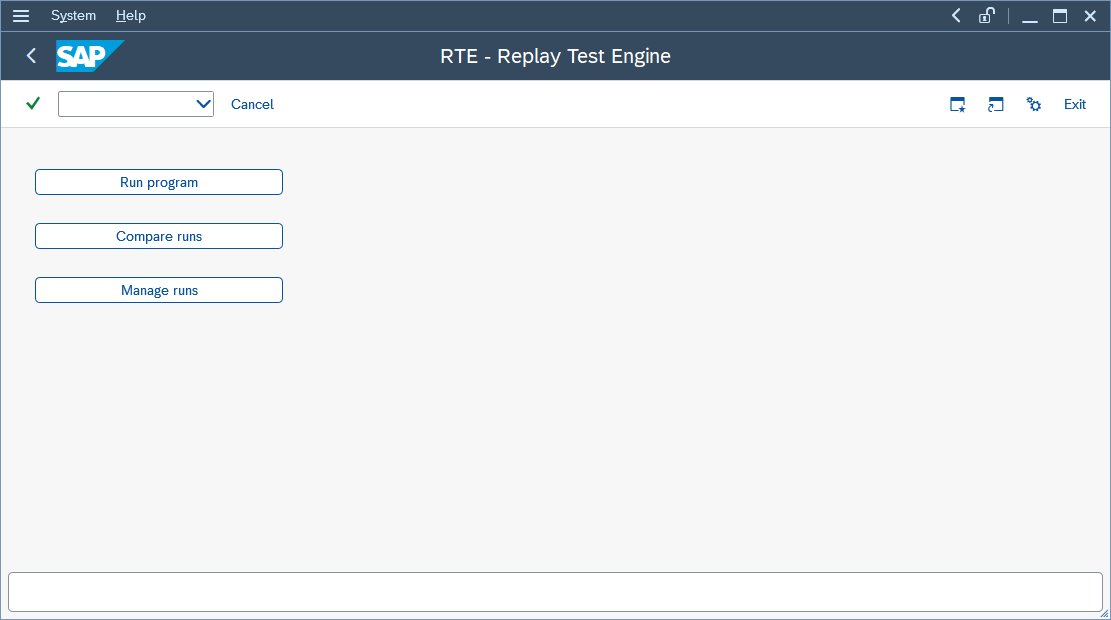

Chapter 3: The Central RTE Transaction

Once you have instrumented your program(s) by adding the necessary ZCL_RTE=>EXPORT_DATA calls (as described in Chapter 2), the next step is to interact with the RTE tool itself to create runs and perform comparisons. The primary access point for all RTE functionalities is the central transaction ZRTE_START.

3.1 Accessing the RTE Main Screen

To begin using the RTE tool's interactive features, navigate to the SAP transaction ZRTE_START. You will be presented with the main screen, titled "RTE - Replay Test Engine", which serves as the central hub for managing your regression testing and configuration verification activities.

3.2 Overview of Main Functions

The ZRTE_START transaction provides direct access to the core components of the RTE solution through three main options:

- Run program: This function is used to execute your instrumented SAP program (custom or standard) under specific conditions (usually with a predefined variant) and capture

the data you marked for export using

ZCL_RTE=>EXPORT_DATA. Each execution creates a "run" record within RTE, storing the captured data and associated context (like program name, variant, timestamp, user, and an optional description). These runs form the basis for later comparisons. You will typically use this to create your reference runs before making changes (code or configuration) and subsequent runs after making changes. - Compare runs: This is the heart of the RTE tool. This function provides a powerful interface for comparing the data captured in different runs. You can compare a recent run against a reference run of the same program, compare two arbitrary runs, or even compare a run against the output of a different program (cross-program testing). This section offers various comparison modes and advanced data mapping capabilities to pinpoint differences effectively.

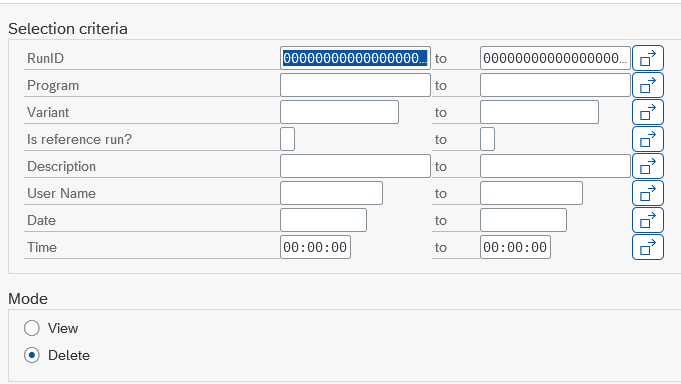

- Manage runs: This utility allows you to view, search for, and maintain the test runs that have been previously created. You can search for runs based on various criteria (like program name, variant, user, date, or description), preview the data captured within a specific run, and delete runs that are no longer needed.

3.3 Navigating This Manual

The subsequent chapters of this manual will delve into the detailed usage of each of these core functions ("Run program", "Compare runs", and "Manage runs"), explaining their features, options, and workflows step-by-step. You will typically start by creating runs using "Run program", and then analyze the results using "Compare runs".

Chapter 4: Creating Test Runs

The first step in the practical application of RTE is typically to create one or more "runs" of the program you intend to test. A run represents a single execution via RTE, during which the tool captures the data you designated using the ZCL_RTE=>EXPORT_DATA method. These saved runs are the essential building blocks for later comparisons.

To create a run, select the "Run program" option from the main ZRTE_START transaction screen (described in Chapter 3).

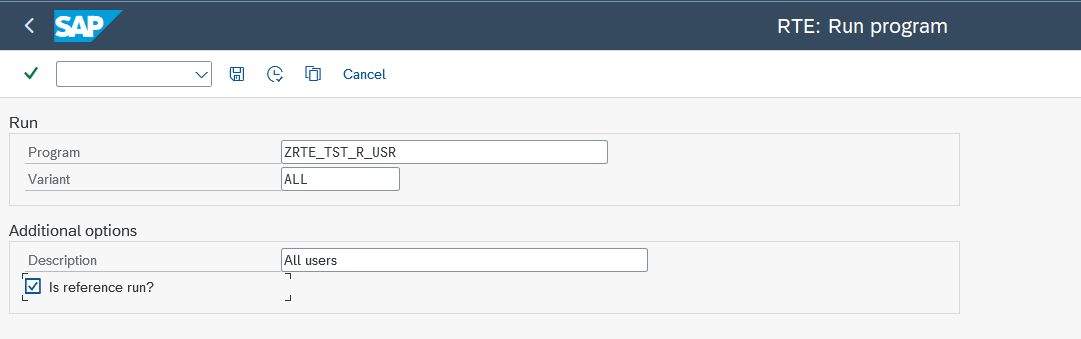

This will navigate you to the "RTE: Run program" screen.

4.1 Specifying Run Parameters

On the "RTE: Run program" screen, you need to provide details about the execution you want to perform:

- Program: Enter the technical name of the SAP report (program) you wish to execute (e.g.,

ZRTE_TST_R_USR). Important: This should be the report name, not the transaction code that might normally be used to start it. -

Variant: Specify the execution variant you want to use for this run.

- It is highly recommended to use a saved variant. Using variants ensures that the program runs with the exact same selection criteria and parameters each time, which is crucial for achieving repeatable and reliable test results for both code and configuration checks.

- Real-World Scenarios & Coverage: Variants used for testing should reflect real-life scenarios as closely as possible, utilizing anonymized production data on development and quality systems if only possible. The more variants (and thus reference runs) created, the more comprehensive the code coverage. As you prepare more tests and variants, you increase the overall test coverage.

- Skipping Variant: If you leave the "Variant" field blank, RTE will display the program's standard selection screen when you execute the run (if one exists). You can then enter parameters manually. However, this approach makes it harder to guarantee test repeatability, as manual input can vary between runs.

- It's important to note that while using variants is highly recommended for programs with selection screens to ensure test repeatability, some SAP programs are designed without a selection screen altogether. For such programs, leaving the "Variant" field blank in the "RTE: Run program" screen is the correct and necessary procedure to execute them via RTE. In these cases, the tool will directly execute the program without attempting to display a selection screen.

- Description: (Optional) Provide a meaningful description for this run. This text will help you identify the run later when managing runs or setting up comparisons (e.g., "Baseline before performance tuning," "Run after defect fix #123," "Sales Pricing - Before ZNEW activation," "Payroll - Jan 2024 - Pre-config change").

- Is reference run?: (Checkbox) This is a critical option.

- Check this box if this specific run represents the correct, expected output against which future runs (after code or configuration changes) should be compared. Typically, you create reference runs before making modifications.

- Uniqueness Constraint: For any given combination of Program and Variant, only one run can be marked as the reference run at any time.

4.2 Handling Existing Reference Runs

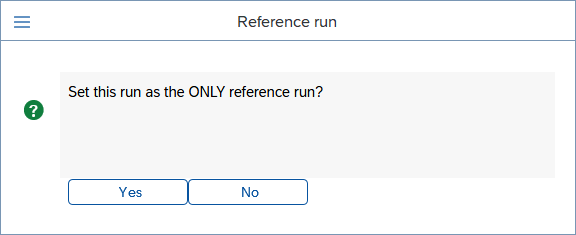

Because only one reference run is allowed per program/variant pair, if you check the "Is reference run?" box and a reference run already exists for the specified Program and Variant, RTE will prompt you for confirmation:

- Confirmation: The pop-up asks: "Set this run as the ONLY reference run?".

- If you choose "Yes", the current run you are creating will be marked as the new reference run, and the previously existing reference run for this program/variant combination will automatically have its reference flag removed.

- If you choose "No", the current run will still be created, but it will not be marked as a reference run, even though you initially checked the box on the selection screen. The existing reference run will remain unchanged.

4.3 Executing the Run

Once you have filled in the parameters, execute the run (press F8 or click the Execute icon). RTE launches the specified program with the selected variant (or displays the selection screen if no variant was provided). As the program executes, the ZCL_RTE=>EXPORT_DATA calls within its code (or enhancement) trigger RTE to capture the specified variables.

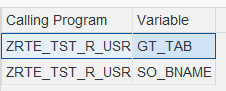

After the program execution finishes, RTE displays a summary screen showing the variables that were successfully exported during this run.

This list shows the logical variable names (Variable column) you defined using the IV_VAR_NAME parameter in your ZCL_RTE=>EXPORT_DATA calls, along with the program they originated from.

Disclaimer: Handling Runtime Errors (Short Dumps). Please be aware that RTE executes the target program as is. If the program encounters a runtime error (short dump) during an execution initiated by RTE, the execution terminates, and the run will likely not be saved completely or correctly. RTE does not include mechanisms to catch or handle these short dumps. This applies even if the runtime error is potentially caused by incorrect usage of ZCL_RTE methods (e.g., passing incompatible data types to EXPORT_DATA or COMBINE_DATA). Ensuring the stability of the program under test, including the correct implementation of RTE method calls (whether in custom code or enhancements), remains the responsibility of the developer initiating the run. If a dump occurs, check transaction ST22 for details.

4.4 Inspecting Captured Data

You can immediately inspect the data captured for any variable listed. Simply double-click on the row corresponding to the variable you want to view. RTE will then display the content of that variable (in read-only mode) as it was saved during the run.

This allows for a quick verification that the expected data was captured correctly.

This run, along with the captured data, is now saved within the RTE system and can be used for comparisons (see Chapter 5) or managed via the "Manage runs" function (see Chapter 6).

Chapter 5: Comparing Test Runs

Having successfully instrumented your programs (Chapter 2) and captured baseline executions as runs (Chapter 4), we now arrive at the central purpose and most powerful aspect of the RTE tool: comparing these runs. This is where RTE truly shines, allowing you to analyze the effects of your code modifications or verify the outcomes of configuration changes, ensuring that enhancements deliver the intended value without introducing unintended side effects. This chapter will guide you through the different ways to initiate comparisons, the various types of analysis RTE can perform, and data mapping features that enable meaningful comparisons even when program structures evolve.

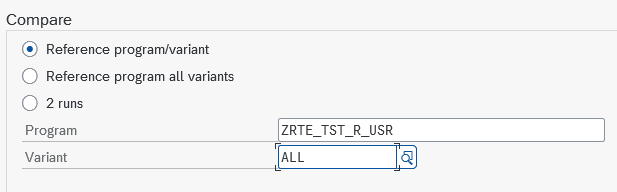

5.1 Understanding the Modes

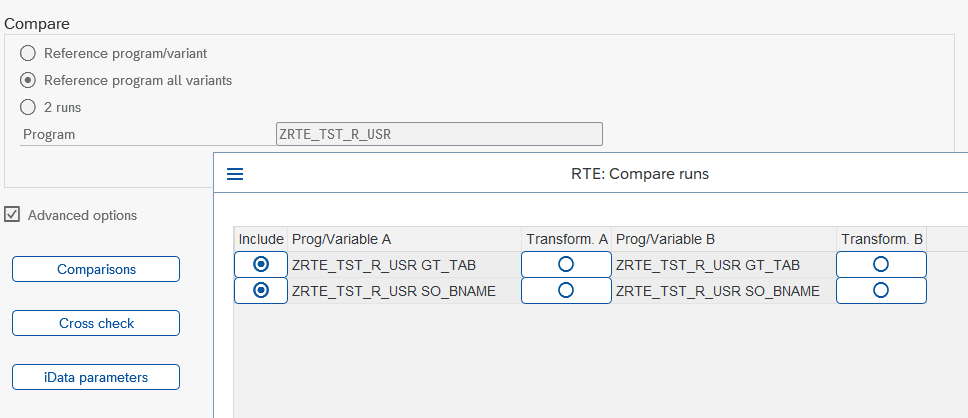

We start the comparison on the "RTE: Compare runs" screen, where you must first decide how you want RTE to select the runs for comparison. RTE offers three distinct operational modes, each suited to different testing scenarios:

- Reference program/variant:

- Core Idea: This mode focuses on validating a single, specific test scenario after code or configuration changes. You pinpoint the exact Program and Variant you are interested in testing.

- How it Works: RTE locates the unique reference run previously saved for this specific program/variant combination. Then, crucially, it triggers a fresh execution of the current version of the specified program (with its current code and system configuration) using that same variant. Finally, it compares the data exported during this new execution against the data stored within the historical reference run.

- Prerequisite: A reference run must already exist for the chosen program and variant. If not, RTE will issue a notification, and no comparison can proceed for that specific selection. Convenient search helps are available, first for selecting the Program, and then for listing only the valid Variants associated with that program.

- Reference program all variants:

- Core Idea: This mode offers a broader safety net, automatically testing all established reference scenarios for a given program in one go. You only need to specify the Program name.

- How it Works: RTE scans its records to find every variant associated with the entered Program name for which a reference run has been previously created. For each of these identified variants, it performs the same process as the single variant mode: it re-executes the program with that variant and compares the new results against the corresponding reference run.

- Typical Use Case: This is the ideal mode for comprehensive regression testing after program changes. It provides assurance that your modifications haven't broken functionality across any of the standard test scenarios you've established as references. It's thorough but may take longer if many reference variants exist.

-

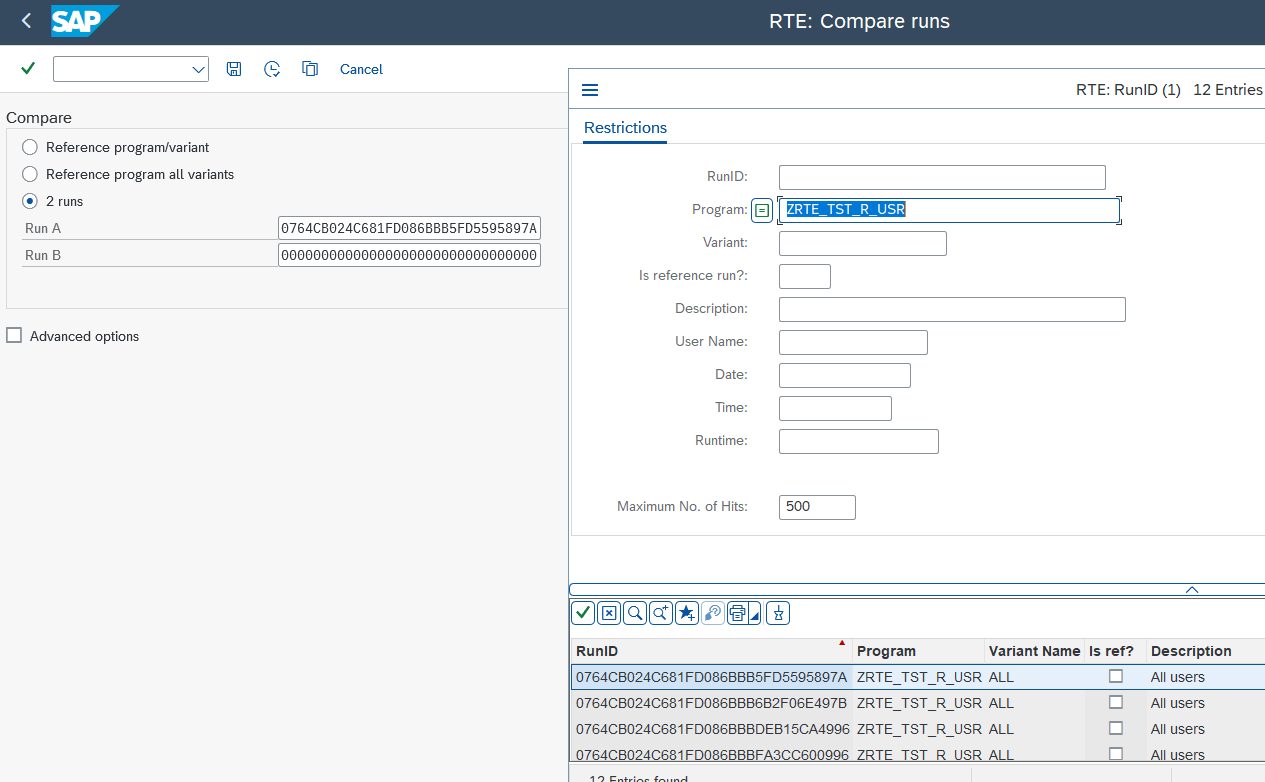

2 runs:

- Core Idea: This mode grants complete manual control, allowing you to select any two previously completed runs from the RTE history for a direct comparison.

- How it Works: You explicitly provide the unique technical identifiers (Run IDs) for the two runs you wish to compare, designated as "Run A" and "Run B". RTE then retrieves the data stored for these specific runs and performs the comparison.

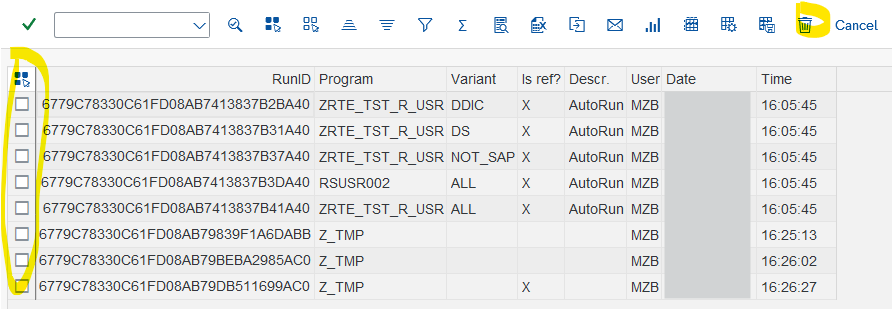

- Selecting Runs: The Run IDs themselves are long, system-generated strings and not easily remembered. Therefore, RTE provides search helps for both the "Run A" and "Run B" fields. These search helps allow you to locate the desired runs using familiar criteria such as the Program name, Variant used, the Description you provided during run creation, the User who created the run, or the Date/Time of execution.

5.2 Practical Scenarios Setup

To make the comparison features easy to follow, we'll use a concrete example throughout this chapter. Our examples are based on the sample program ZRTE_TST_R_USR, which is provided as part of the RTE tool package (you are encouraged to copy this program into your own Z*-namespace program to experiment alongside this manual). This program's core function is to display user information from the standard SAP table USR02. While simple, it is perfectly sufficient to demonstrate RTE's comparison capabilities.

Initial Preparation Steps:

- Variant Creation: Define and save three distinct variants for your ZRTE_TST_R_USR program:
  - ALL: Leave all selection fields blank (this selects all users).
  - DS: Restrict the user selection to include only DDIC and SAP*.
  - DDIC: Restrict the user selection to include only DDIC.
- Enable RTE Data Export: Ensure that the necessary ZCL_RTE=>EXPORT_DATA statements are active in the source code of your ZRTE_TST_R_USR program by uncommenting the code block marked between the <1> and </1> tags in the sample program. This configures the program to export the main internal table containing user data (logically named GT_TAB in the EXPORT_DATA call) and the selection criteria used (SO_BNAME). (Refer back to Chapter 2 for a detailed explanation of adding EXPORT_DATA calls.)
- Establish the Baseline (Create Reference Runs): This is a critical step. Use the "Run program" function described in Chapter 4. Execute your ZRTE_TST_R_USR program once for each of the three variants (ALL, DS, DDIC). During each of these initial executions, make sure to check the "Is reference run?" checkbox. This flags these specific runs as the official baseline, the "known good" state against which future changes will be measured.
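The instrumentation described in the second step might look roughly like the following in your own copy of the sample program. This is a hypothetical sketch, not the shipped source of ZRTE_TST_R_USR: the report name ZRTE_MY_R_USR, the type TY_USER, and the choice of USR02 fields are assumptions; only the ZCL_RTE=>EXPORT_DATA call with its IV_VAR_NAME and I_DATA parameters is taken from this manual.

```abap
REPORT zrte_my_r_usr.  " hypothetical Z* copy of ZRTE_TST_R_USR

TABLES usr02.  " work area needed for the SELECT-OPTIONS reference below

SELECT-OPTIONS so_bname FOR usr02-bname.  " user selection (variants ALL, DS, DDIC)

" Assumed output structure; the real sample program may select other fields
TYPES: BEGIN OF ty_user,
         bname TYPE usr02-bname,
         class TYPE usr02-class,
       END OF ty_user.

DATA gt_tab TYPE STANDARD TABLE OF ty_user WITH EMPTY KEY.

START-OF-SELECTION.
  SELECT bname, class
    FROM usr02
    WHERE bname IN @so_bname
    INTO TABLE @gt_tab.

  " <1> RTE export: keep these logical names stable between reference and new runs
  zcl_rte=>export_data( iv_var_name = 'GT_TAB'   i_data = gt_tab ).
  zcl_rte=>export_data( iv_var_name = 'SO_BNAME' i_data = so_bname[] ).  " table body, not the header line
  " </1>
```

Keeping the IV_VAR_NAME literals unchanged matters because the results grid lines variables up by these logical names (see the Variable columns described in section 5.3).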

With these variants created and initial reference runs saved, we have established our testing foundation.

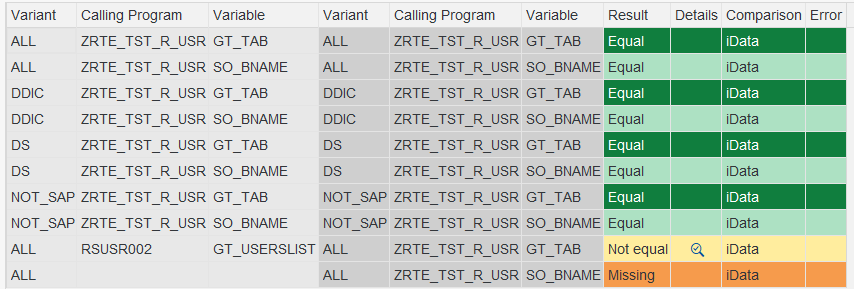

5.3 The First Comparison

Before we introduce any modifications to our test program, let's perform a comparison to confirm that RTE sees the current state as identical to the reference runs we just created. This builds confidence in the setup.

- Navigate back to the ZRTE_START transaction and choose "Compare runs".
- Select the comparison mode "Reference program all variants". This tells RTE to check all established reference points for the specified program.
- In the "Program" input field, enter the name of your test program, ZRTE_TST_R_USR.
- Execute the comparison (press F8 or click the Execute icon).

RTE now re-executes your program three times, once for each reference variant (ALL, DS, DDIC), and compares the data captured during these new executions against the data stored in the corresponding reference runs created moments ago. Since we haven't altered the program's code, the results should match the references exactly.

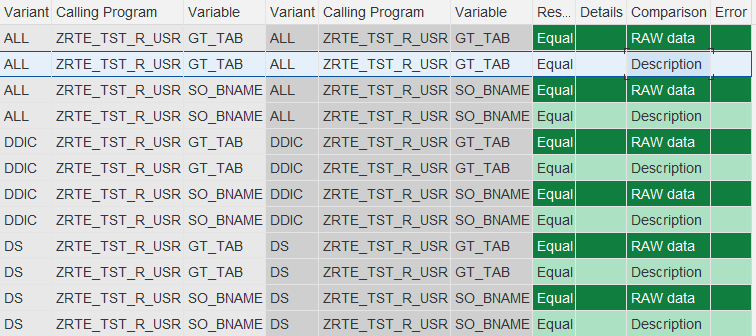

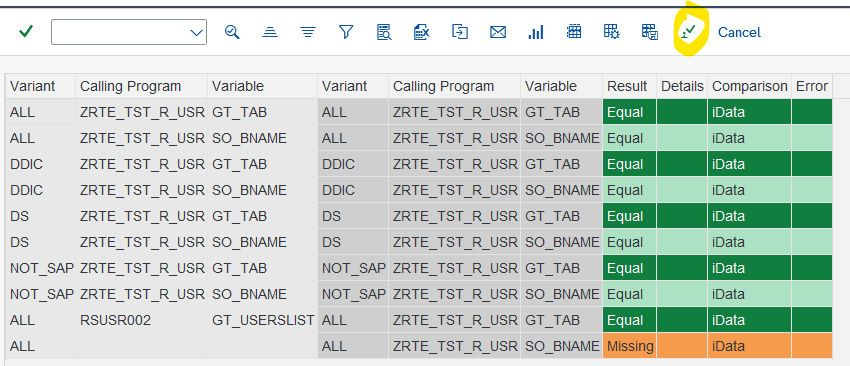

Decoding the Comparison Results Grid:

The outcome of the comparison is presented in an ALV grid.

Let's break down the columns and their significance:

- Identifying the Comparison Pair:
  - Left Side (Reference Run Information, light grey background): These columns identify the baseline element of the comparison.
    - Variant: Shows the variant name associated with the reference run.
    - Calling Program: Confirms the program name from the reference run.
    - Variable: Displays the logical name (the IV_VAR_NAME you used in ZCL_RTE=>EXPORT_DATA) of the specific data variable being compared from the reference run.
  - Right Side (New Run Information, darker grey background): These columns identify the run that was just executed for comparison.
    - Variant, Calling Program, Variable: Show the corresponding details for the newly generated run.
    - Observation: In the "Reference program..." modes, these values should mirror the left side. A discrepancy (e.g., a variable appearing on the left but not on the right) immediately signals that the set of exported variables has changed between the reference capture and the current execution.
- Analysing the Outcome:
  - Result: This column provides the high-level verdict of the comparison for this specific variable pair and comparison type. Possible statuses are:
    - Equal (highlighted green): Success. The comparison determined that the data (or structure, depending on the comparison type) is identical between the reference and the new run.
    - Not equal (highlighted yellow): A difference was detected; the data or structure does not match. Further investigation is needed.
    - Missing (highlighted orange): The variable was exported in one of the runs (e.g., the reference) but not in the other (e.g., the new run), or vice versa. This often points to changes in the program logic controlling the EXPORT_DATA call.
    - Error (highlighted red): The comparison itself could not be performed for technical reasons. The Error column provides more details.
  - Details: This column becomes active with the iData comparison type. If an iData comparison was performed and the Result is "Not equal", a magnifying glass icon appears here. Clicking it lets you drill down to the exact data differences (explored further in section 5.4). For other comparison types, or when the result is "Equal", this column is blank and inactive.
  - Comparison: Specifies which type of comparison this row of the grid represents. RTE can perform several types of checks, configured in the advanced options:
    - RAW data: The most basic and fastest comparison. RTE compares the serialized values saved for the variables. It is a yes/no check: either the values are identical (Equal) or they are not (Not equal). It cannot show what changed, only that a change occurred, which makes it useful for quick checks on large datasets where performance is key.
    - Description: This comparison focuses solely on the structure of the exported variable, not on the data values. It checks whether elements such as field names, data types, field lengths, or the order of fields within an internal table have changed between the reference and the new run. An Equal result means the structure is unchanged; Not equal means the structure has been altered.
    - iData: The most powerful and informative comparison type. RTE deserializes the saved raw data back into structured information (such as internal tables) for both runs and then performs a detailed field-by-field and row-by-row comparison, capable of identifying specific value changes, added rows, or deleted rows. While significantly more resource-intensive and slower than RAW data or Description, it is essential for understanding the nature of data differences and enables the drill-down via the Details column. Note: iData comparisons do NOT preserve the original order of records. Both tables are sorted before comparison, which makes it easier to identify differences such as records added or removed in subsequent runs.
  - Error: If the Result column shows "Error", this column contains a textual explanation of the problem encountered during the comparison attempt. Common examples include issues with data deserialization or, frequently with iData, an inability to compare due to incompatible data structures between the two runs.
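The difference between the RAW data and iData comparison types can be illustrated with a small standalone report. This is a conceptual sketch only, not RTE's implementation: serializing with CALL TRANSFORMATION id, the report name, and the sample data are assumptions, and the row-level check is simplified (a changed row shows up once as "only in A" and once as "only in B", whereas RTE's Diff tab can also mark differing field values).

```abap
REPORT zrte_demo_compare_types.  " hypothetical demo report

TYPES: BEGIN OF ty_user,
         bname TYPE c LENGTH 12,
         class TYPE c LENGTH 12,
       END OF ty_user,
       ty_users TYPE STANDARD TABLE OF ty_user WITH EMPTY KEY.

DATA: lv_raw_a TYPE xstring,
      lv_raw_b TYPE xstring.

START-OF-SELECTION.
  " Same users, different row order, one changed CLASS value
  DATA(lt_a) = VALUE ty_users( ( bname = 'DDIC' class = 'SUPER' )
                               ( bname = 'SAP*' class = 'SUPER' ) ).
  DATA(lt_b) = VALUE ty_users( ( bname = 'SAP*' class = 'SAP*' )
                               ( bname = 'DDIC' class = 'SUPER' ) ).

  " RAW-style check: serialize both tables and compare the results.
  " Only equal / not equal is known; in this sketch even row order counts.
  CALL TRANSFORMATION id SOURCE data = lt_a RESULT XML lv_raw_a.
  CALL TRANSFORMATION id SOURCE data = lt_b RESULT XML lv_raw_b.
  IF lv_raw_a = lv_raw_b.
    WRITE: / 'RAW data: Equal'.
  ELSE.
    WRITE: / 'RAW data: Not equal'.
  ENDIF.

  " iData-style check: sort both sides (original order is lost),
  " then list rows that exist on one side only
  SORT lt_a BY bname class.
  SORT lt_b BY bname class.
  LOOP AT lt_a INTO DATA(ls_a).
    IF NOT line_exists( lt_b[ bname = ls_a-bname class = ls_a-class ] ).
      WRITE: / 'Only in A:', ls_a-bname, ls_a-class.
    ENDIF.
  ENDLOOP.
  LOOP AT lt_b INTO DATA(ls_b).
    IF NOT line_exists( lt_a[ bname = ls_b-bname class = ls_b-class ] ).
      WRITE: / 'Only in B:', ls_b-bname, ls_b-class.
    ENDIF.
  ENDLOOP.
```

With this sample data, the RAW-style check should report Not equal without saying why, while the iData-style loops should list SAP*/SUPER as only in A and SAP*/SAP* as only in B. The DDIC row is matched even though its position differs, because both tables were sorted first.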

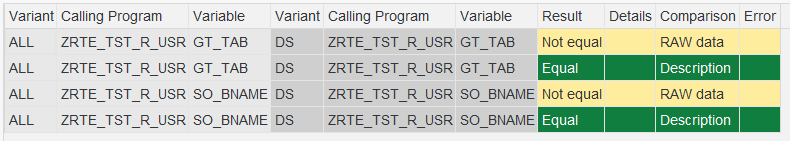

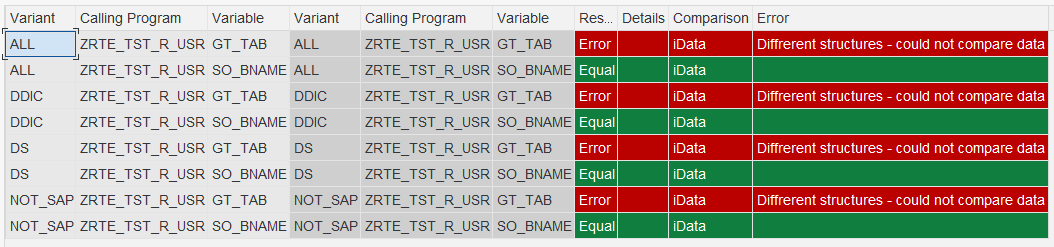

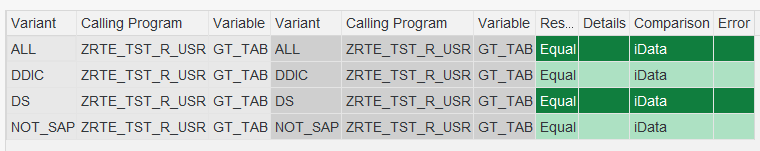

In our initial baseline check, we should observe green "Equal" statuses across the board for both GT_TAB and SO_BNAME for all three variants (ALL, DS, DDIC).

Performance notice. During RTE runs, the variables selected for testing are serialized and stored in the database. During comparisons, this data is retrieved and analysed. For large datasets, these database read/write operations can significantly impact performance. The comparison process may also be memory-intensive, as RTE needs to hold the "before" and "after" states along with the computed differences; in total, this can require up to three times the size of the original variable state in memory.

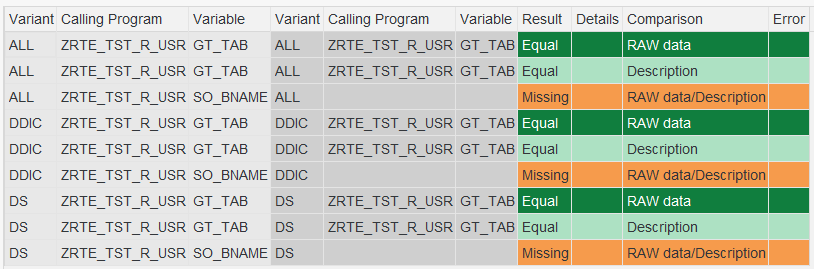

Simulating a Code Change

To see how RTE flags discrepancies, let's simulate a simple code change. Imagine we go back into the ZRTE_TST_R_USR program and comment out the specific line responsible for exporting the selection criteria: ZCL_RTE=>EXPORT_DATA( iv_var_name = 'SO_BNAME' i_data = so_bname[] ). After saving and activating this change, we re-run the "Reference program all variants" comparison.

The results grid now tells a different story. For the variable SO_BNAME, the Result column displays the orange "Missing" status. Furthermore, the right-hand columns (Variant, Calling Program, Variable) for the SO_BNAME rows are empty, visually confirming that this variable was present in the reference run (left side) but was not captured in the new execution because of our code change.

5.4 Drilling Down

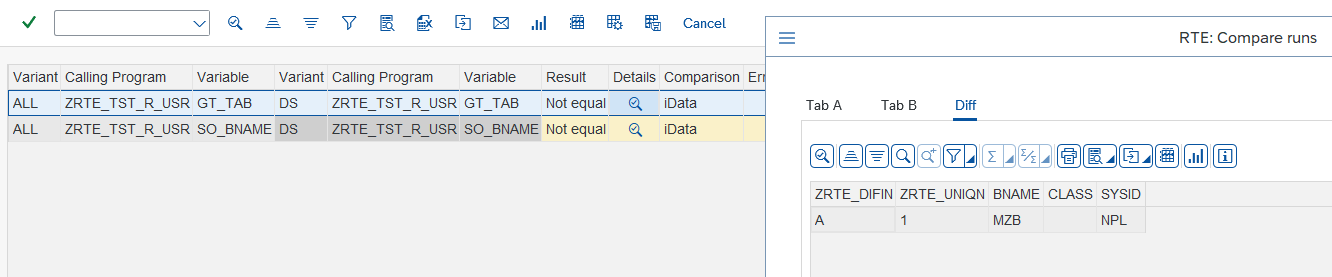

Let's set up a scenario where we know the data content differs. We'll use the "2 runs" mode to compare the reference run created with the ALL variant against the reference run created with the DS variant. We know these contain different sets of users.

- Navigate to "Compare runs".

- Choose the mode: "2 runs".

- Use the search helps provided for the "Run A" and "Run B" fields. For "Run A", locate and select the Run ID of the reference run of ZRTE_TST_R_USR executed with variant ALL. For "Run B", select the Run ID of the reference run using variant DS.
- Execute the comparison with default settings.

For the GT_TAB variable, the RAW data comparison correctly reports Result = Not equal, but the Details column remains inactive, offering no further insight.

Activating and Utilizing the iData Comparison:

To unlock the detailed view, we need to explicitly instruct RTE to perform the iData comparison:

- On the "Compare runs" selection area, locate and check the "Advanced options" checkbox. This reveals additional control buttons.

- Click the newly visible "Comparisons" button.

- A configuration pop-up appears, showing the available comparisons. Select "iData". For clarity in this example, you might also deselect "Raw" and "Description". The "iData parameters" button (explained in section 5.5) also becomes active when "iData" is selected.

- Now, re-execute the comparison from the main screen.

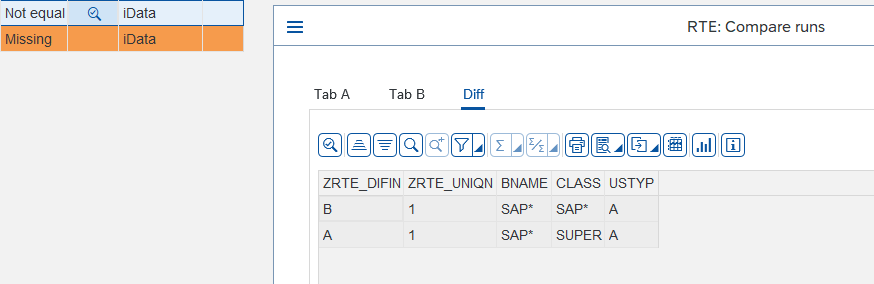

The results grid should now reflect that the iData comparison was performed for GT_TAB. The Result will still be "Not equal", but, critically, the Details column for that row now displays the magnifying glass icon.

Inspecting the Differences:

This icon is your gateway to understanding the data mismatch. Double-click the magnifying glass icon associated with the GT_TAB comparison row.

A detailed comparison window pops up, typically featuring three informative tabs:

- Tab A: Displays the complete data content of the GT_TAB variable as captured in the first run you selected (Run A, corresponding to the ALL variant in our case).
- Tab B: Similarly displays the complete data content of GT_TAB as captured in the second run (Run B, corresponding to the DS variant).
- Diff: Often the most useful tab. It highlights only the discrepancies between Tab A and Tab B, clearly marking rows that exist only in Run A, rows that exist only in Run B, and rows with differing field values. In our comparison of the ALL vs. DS variants, the "Diff" tab should show the user records that are present in the ALL variant's run but were excluded from the DS variant's run.

A Note on Test Data Stability: This example also highlights a crucial aspect of effective regression testing and configuration verification: the stability of your test data environment. If your reference runs capture data that is subject to unrelated changes (like the creation of new users in a development system, or master data changes impacting a configured process), comparisons might frequently show "Not equal" simply because this underlying master data has drifted since the reference run was created. This isn't necessarily a failure of your program code or configuration. Therefore, when designing your test variants and creating reference runs, strive to use selection criteria or data snapshots that are stable or where changes are well understood, allowing you to more clearly isolate differences caused by actual code modifications or intended configuration outcomes.

When results are "Not equal," it's important to analyze whether this is due to an intended code change, a bug, an expected outcome from a configuration change, or an external data factor.

5.5 Handling Differences and Leveraging Data Mapping

Let's walk through how RTE assists in verifying code changes or the impact of configuration adjustments, including situations where the structure of the data itself is modified, necessitating RTE's data mapping capabilities.

Scenario 1: Verifying a Targeted Functional Change

- The Requirement: A new business rule dictates that for the specific administrative user

SAP*, the 'CLASS' field in our report's output (GT_TAB) should always display the literal value 'SAP*', overriding whatever value might be stored in theUSR02table. This change should only affect theSAP*user. - Preparation: To make testing this specific requirement easier, let's first create another variant for our test program. Name it

NOT_SAPand configure its selection criteria to include all users exceptSAP*. Once created, execute the program with thisNOT_SAPvariant and save it as a reference run. This gives us a baseline that explicitly excludes the user affected by our planned change. - Implementing the Code Change: Modify the source code of

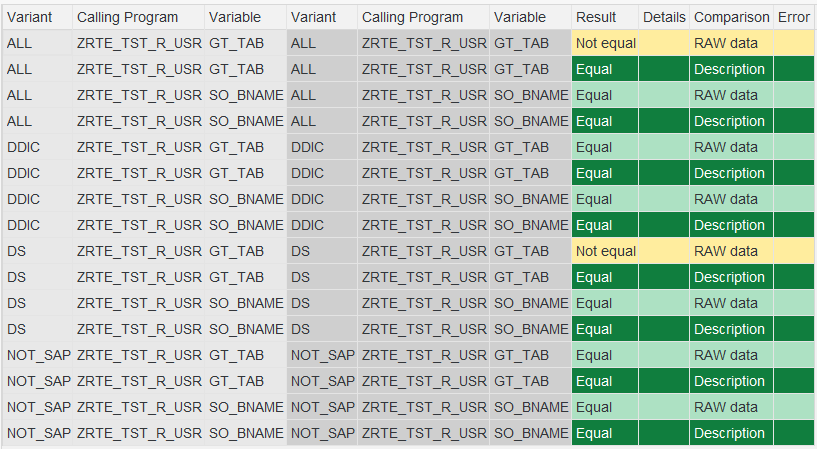

ZRTE_TST_R_USRto implement the hardcoding logic forSAP*'s CLASS field (by uncommenting the code block between tags<2>and</2>in the provided sample program). - Performing the Regression Test: Go to "Compare runs". Select the "Reference program all variants" mode for

ZRTE_TST_R_USR.

-

Analysing the Expected Result: The comparison grid should now clearly demonstrate the impact of our change:

- For variants

ALLandDS(which both includeSAP*), theResultfor theGT_TABvariable should be "Not equal". - Crucially, for variants

DDICand our newly addedNOT_SAP(neither of which includesSAP*), theResultforGT_TABshould remain "Equal". - This outcome precisely validates our requirement: the change correctly affected only the intended user, and the program's behavior for other user sets remained unchanged, confirming successful regression testing for this modification.

- For variants
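The content of the <2> block is not reproduced in this manual. Assuming GT_TAB has at least the components BNAME and CLASS (an assumption), logic along the following lines would implement the rule. The sketch also expresses the NOT_SAP selection as a range table (the name GT_NOT_SAP is hypothetical) to show why that variant is unaffected.

```abap
REPORT zrte_demo_sap_star_class.  " hypothetical demo report

TYPES: BEGIN OF ty_user,
         bname TYPE usr02-bname,
         class TYPE usr02-class,
       END OF ty_user.

DATA: gt_tab     TYPE STANDARD TABLE OF ty_user WITH EMPTY KEY,
      gt_not_sap TYPE RANGE OF usr02-bname.

START-OF-SELECTION.
  " Selection equivalent to the NOT_SAP variant: every user except SAP* (exact match)
  gt_not_sap = VALUE #( ( sign = 'E' option = 'EQ' low = 'SAP*' ) ).

  SELECT bname, class
    FROM usr02
    WHERE bname IN @gt_not_sap
    INTO TABLE @gt_tab.

  " <2> Business rule (sketch): CLASS always shows the literal 'SAP*' for user SAP*
  LOOP AT gt_tab ASSIGNING FIELD-SYMBOL(<ls_user>) WHERE bname = 'SAP*'.
    <ls_user>-class = 'SAP*'.
  ENDLOOP.
  " </2>
```

Because the NOT_SAP selection already excludes SAP* in the database query, the LOOP finds no row to change, so GT_TAB for that variant should still match its reference run. With the ALL or DS selection, the loop modifies the SAP* row, which is what produces "Not equal".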

Scenario 2: Dealing with Structural Changes

- The Requirement: A more significant change is requested: we need to add a new column displaying the user type (USTYP field from USR02) to our main output table GT_TAB.
- Implementing the Code Change: Adjust the program logic to include this new field in the structure and data retrieval for GT_TAB (e.g., by uncommenting the code between tags <3> and </3> and commenting out the original SELECT statement between tags <4> and </4> in the sample program).
- Performing the Test: Use the "Reference program all variants" mode with the iData comparison enabled (see Section 5.4 on how to select iData in "Comparisons" under "Advanced options"). If only RAW data or Description comparisons are active, you would simply see the change reported as "Not equal". However, enabling iData without mapping for a structural change leads to an error.
- Observing the Initial Result: This time, the comparison grid presents a different challenge. For the GT_TAB variable, across all variants, you will encounter Result = Error. The corresponding Error column message reads "Different structures - could not compare data". This happens because the structure of GT_TAB in the reference runs (without USTYP) is incompatible with the structure of GT_TAB in the newly executed runs (which now includes USTYP). By default, RTE's iData comparison cannot compare tables with different field layouts.
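The "Different structures" error can be understood by comparing the two line types at runtime. The sketch below uses RTTS (CL_ABAP_STRUCTDESCR) to compare field names, type kinds, lengths, decimals, and field order, which is similar in spirit to the Description comparison type. It is not RTE's code, and the two local types standing in for the old and new GT_TAB layouts are assumptions.

```abap
REPORT zrte_demo_structure_check.  " hypothetical demo report

" Stand-in for GT_TAB's line type in the reference runs (before <3>)
TYPES: BEGIN OF ty_user_old,
         bname TYPE usr02-bname,
         class TYPE usr02-class,
       END OF ty_user_old.

" Stand-in for GT_TAB's line type after the change (USTYP added)
TYPES: BEGIN OF ty_user_new,
         bname TYPE usr02-bname,
         class TYPE usr02-class,
         ustyp TYPE usr02-ustyp,
       END OF ty_user_new.

DATA: ls_old TYPE ty_user_old,
      ls_new TYPE ty_user_new.

START-OF-SELECTION.
  " RTTS: COMPONENTS lists name, type kind, length and decimals in field order
  DATA(lo_old) = CAST cl_abap_structdescr( cl_abap_typedescr=>describe_by_data( ls_old ) ).
  DATA(lo_new) = CAST cl_abap_structdescr( cl_abap_typedescr=>describe_by_data( ls_new ) ).

  IF lo_old->components = lo_new->components.
    WRITE: / 'Structures equal'.
  ELSE.
    WRITE: / 'Different structures'.
    " Report fields that exist only in the new layout
    LOOP AT lo_new->components INTO DATA(ls_comp).
      IF NOT line_exists( lo_old->components[ name = ls_comp-name ] ).
        WRITE: / 'Only in new structure:', ls_comp-name.
      ENDIF.
    ENDLOOP.
  ENDIF.
```

Run as is, the report should print "Different structures" followed by USTYP as the only field present in the new layout.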

iData Parameters and Mapping

This is where RTE's mapping features become indispensable. They allow you to define rules that tell RTE how to reconcile structural differences before performing the data comparison.

The "iData parameters" button becomes available under the "Advanced options" section when the iData comparison is active. Clicking this button is your entry point into the mapping configuration.

- Upon clicking "iData parameters", RTE needs to understand the current structure of the variables being exported by the modified program. To achieve this, it automatically re-executes the program for the relevant variants (those selected in 'Reference program/variant' or 'Reference program all variants' modes). This allows it to fetch the latest structural definitions. Be aware that this re-execution step might introduce a noticeable delay before the "iData parameters" window actually appears, especially if the program or variants process large amounts of data. Patience is required here.

-

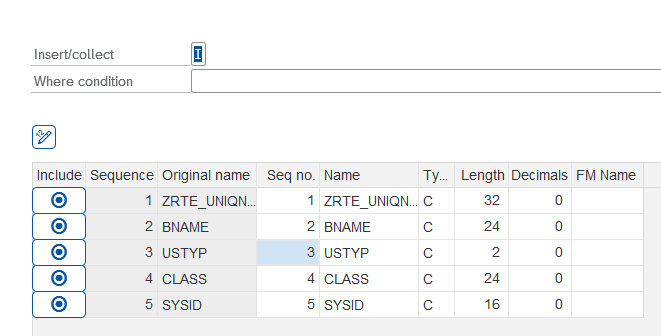

Understanding the "iData parameters" Screen: This screen lists the variable pairs that RTE intends to compare using

iData.-

IncludeColumn: A simple checkbox. Unchecking this will completely exclude the corresponding variable pair (e.g., "SO_BNAME" if we're not interested in it) from the subsequentiDatacomparison. -

Prog/Variable A: Identifies the program and variable name originating from the reference run side (Run A). -

Transform. A: An icon button. Clicking this opens the detailed transformation/mapping editor for the variable(s) from side A. -

Prog/Variable B: Identifies the program and variable name originating from the newly executed run side (Run B). -

Transform. B: An icon button, opening the transformation/mapping editor for the variable(s) from side B.

GT_TAB) has the exact same structure across multiple reference variants being compared in "Reference program all variants" mode, it will be listed only once on this screen, simplifying the mapping process. (See section 5.5.1 for detailed explanation). -

Applying Mapping

Our immediate goal is to compare the data of the original columns, effectively telling RTE to ignore the newly added USTYP column for the purpose of this comparison. This allows us to verify whether the data within the pre-existing fields was unintentionally altered by our structural change.

- On the "iData parameters" screen, locate the row representing the

ZRTE_TST_R_USR GT_TABcomparison. - Since the structural change (the added

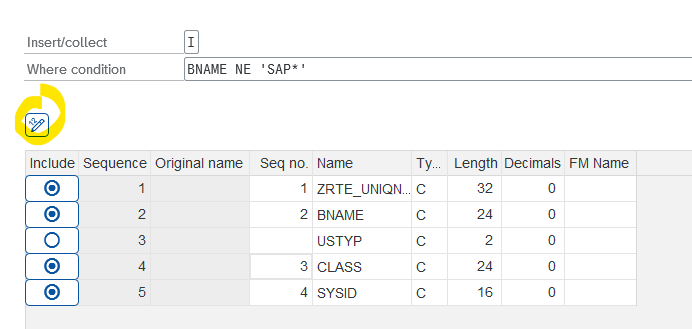

USTYPcolumn) occurred in the new execution (Side B), we need to modify its transformation rules. Click the mapping icon button located in theTransform. Bcolumn for theGT_TABrow. - This action opens the detailed "Transformation" pop-up window for the

GT_TABvariable from the new run.

-

Transformation Options: This pop-up is the heart of data mapping in RTE. Let's examine its components thoroughly:

-

Insert/CollectSwitch: Allows you to choose the processing logic.Insert(default) treats each row individually.Collectapplies ABAP's COLLECT statement logic, which aggregates rows based on non-numeric key fields – useful for specific aggregation scenarios before comparison. -

WHERE conditionField: A powerful filtering mechanism. Here, you can enter conditions using standard ABAPWHEREclause syntax (but without typing theWHEREkeyword itself). For example,BNAME NE 'SAP*'orSTATUS EQ 'A' AND VALUE GT 100. RTE performs a syntax check on the condition entered. -

The Field Mapping Grid: This grid lists all fields present in the variable's current structure (in this case,

GT_TABfrom the new run, includingUSTYP). Each row allows detailed control:-

IncludeCheckbox: The primary control for including or excluding a specific field from the comparison. If unchecked, the field is completely ignored. -

Sequence/Original nameColumns: These are display-only, showing the field's original position and technical name in the source structure for reference. -

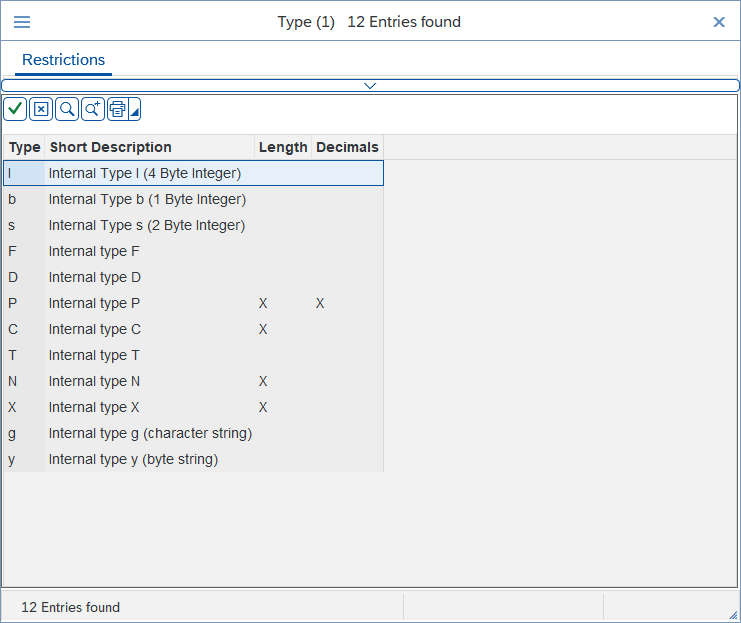

Seq no.Column: Editable. This numeric field dictates the column order in the final, mapped structure that will be used for the comparison. You can change these numbers to reorder columns. -

NameColumn: Editable. Defines the field name in the mapped structure. This allows you to rename fields if necessary for the comparison (e.g., if comparingMATNRfrom one table toMATERIALin another). -

Type,Length,DecimalsColumns: Editable. These allow you to change the data type ('C', 'N', 'P', 'D', 'T', 'STRING', etc.) and corresponding size attributes of a field specifically for the comparison. This is crucial when comparing fields that store similar data but have slightly different technical definitions. Adhere to standard ABAP type definitions. The search help (F4) on theTypefield provides guidance on valid types and whether Length/Decimals are applicable. RTE validates these settings and will report errors if inconsistent (e.g., trying to specify a length for a STRING type:For Type g LENGTH must be equal 0, and DECIMALS must be equal 0).

-

FM NameColumn: Editable. For advanced scenarios, you can specify the name of a standard SAP conversion exit function module (e.g.,CONVERSION_EXIT_ALPHA_INPUT) to transform field values before comparison.

-

-

Renumerate Button(Pencil Icon): A utility button. After including/excluding fields, clicking this button automatically re-calculates and assigns sequential numbers to theSeq no.column for all included fields, ensuring a clean sequence. -

OK (Checkmark) / Cancel (X)Buttons: Located at the bottom (OK) or top (Cancel). Use OK to save the mapping changes you've made within this transformation pop-up. Use Cancel to discard them (you will be asked to confirm "Exit without saving?").

-
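To illustrate how the Insert/Collect switch and the WHERE condition interact, the following standalone report applies a WHERE condition (held as a string, as it would be typed into the pop-up) and then either inserts or collects the surviving rows. It is a conceptual sketch using ABAP's dynamic WHERE in LOOP AT and the COLLECT statement; the report name, the BUKRS/AMOUNT structure, and the data are hypothetical, and RTE's internal processing may differ.

```abap
REPORT zrte_demo_where_collect.  " hypothetical demo report

TYPES: BEGIN OF ty_row,
         bukrs  TYPE c LENGTH 4,              " character-like: part of the default key
         amount TYPE p LENGTH 15 DECIMALS 2,  " numeric: summed by COLLECT
       END OF ty_row,
       ty_rows TYPE STANDARD TABLE OF ty_row WITH DEFAULT KEY.

PARAMETERS p_coll AS CHECKBOX.  " blank = Insert (default), X = Collect

START-OF-SELECTION.
  DATA(lt_source) = VALUE ty_rows( ( bukrs = '1000' amount = '10.00' )
                                   ( bukrs = '1000' amount = '5.50' )
                                   ( bukrs = '2000' amount = '7.00' ) ).
  DATA lt_mapped TYPE ty_rows.

  " Condition as typed into the WHERE condition field (no WHERE keyword)
  DATA(lv_where) = `BUKRS NE '2000'`.

  LOOP AT lt_source INTO DATA(ls_row) WHERE (lv_where).
    IF p_coll = abap_true.
      COLLECT ls_row INTO lt_mapped.       " rows with the same BUKRS merged, AMOUNT summed
    ELSE.
      INSERT ls_row INTO TABLE lt_mapped.  " every row kept individually
    ENDIF.
  ENDLOOP.

  LOOP AT lt_mapped INTO DATA(ls_out).
    WRITE: / ls_out-bukrs, ls_out-amount.
  ENDLOOP.
```

With the checkbox blank (Insert), the output should contain two rows for company code 1000 (10.00 and 5.50); with it checked (Collect), a single row for 1000 with 15.50. Company code 2000 is filtered out in both cases.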

- Applying the Mapping: In our scenario, scroll through the field grid in the "Transformation" pop-up for Transform. B until you find the row for the newly added field, USTYP. Uncheck the Include checkbox for this row.
- Optionally, click the Renumerate button (pencil icon) to update the Seq no. column for the remaining included fields.
- Click the OK (Checkmark) button to confirm and close the "Transformation" pop-up, saving the rule that excludes USTYP from side B.
- You are now back on the main "iData parameters" screen. Click its OK (Checkmark) button to apply all defined parameter settings.
- Finally, re-execute the comparison from the main "Compare runs" screen.

Interpreting the Result: The comparison for GT_TAB should now proceed without the "Different structures" error. We have used mapping to isolate and ignore the structural change, allowing us to focus the comparison on the stability of the original data fields.
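Conceptually, unchecking Include for USTYP on side B means both sides are brought into the same reduced structure before their data are compared. The sketch below models that with RTTS: it takes side B's components, drops USTYP, builds a table type at runtime, and copies both sides into it with MOVE-CORRESPONDING. This is an illustration under assumptions (hypothetical report and type names, one sample row per side), not RTE's actual mapping code.

```abap
REPORT zrte_demo_drop_field.  " hypothetical demo report

" Old GT_TAB line type (side A, reference run)
TYPES: BEGIN OF ty_user_old,
         bname TYPE usr02-bname,
         class TYPE usr02-class,
       END OF ty_user_old,
       ty_users_old TYPE STANDARD TABLE OF ty_user_old WITH EMPTY KEY.

" New GT_TAB line type with USTYP (side B, new run)
TYPES: BEGIN OF ty_user_new,
         bname TYPE usr02-bname,
         class TYPE usr02-class,
         ustyp TYPE usr02-ustyp,
       END OF ty_user_new,
       ty_users_new TYPE STANDARD TABLE OF ty_user_new WITH EMPTY KEY.

DATA: ls_b_line TYPE ty_user_new,
      lr_a      TYPE REF TO data,
      lr_b      TYPE REF TO data.

FIELD-SYMBOLS: <lt_a_mapped> TYPE STANDARD TABLE,
               <lt_b_mapped> TYPE STANDARD TABLE.

START-OF-SELECTION.
  DATA(lt_side_a) = VALUE ty_users_old( ( bname = 'DDIC' class = 'SUPER' ) ).
  DATA(lt_side_b) = VALUE ty_users_new( ( bname = 'DDIC' class = 'SUPER' ustyp = 'A' ) ).

  " Start from side B's components and drop USTYP (the unchecked Include box)
  DATA(lo_b_line) = CAST cl_abap_structdescr( cl_abap_typedescr=>describe_by_data( ls_b_line ) ).
  DATA(lt_comp) = lo_b_line->get_components( ).
  DELETE lt_comp WHERE name = 'USTYP'.

  " Build the mapped table type at runtime
  DATA(lo_mapped) = cl_abap_tabledescr=>create( p_line_type = cl_abap_structdescr=>create( lt_comp ) ).

  " Copy both sides into the mapped shape; MOVE-CORRESPONDING matches fields by name
  CREATE DATA lr_a TYPE HANDLE lo_mapped.
  CREATE DATA lr_b TYPE HANDLE lo_mapped.
  ASSIGN lr_a->* TO <lt_a_mapped>.
  ASSIGN lr_b->* TO <lt_b_mapped>.
  MOVE-CORRESPONDING lt_side_a TO <lt_a_mapped>.
  MOVE-CORRESPONDING lt_side_b TO <lt_b_mapped>.

  IF <lt_a_mapped> = <lt_b_mapped>.
    WRITE: / 'GT_TAB (mapped): Equal'.
  ELSE.
    WRITE: / 'GT_TAB (mapped): Not equal'.
  ENDIF.
```

With the sample rows, the mapped tables should compare as Equal, since the only difference (USTYP) is no longer part of the compared structure.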

Scenario 3: Filtering

Let's revisit Scenario 1 (hardcoding the SAP* class), where variants ALL and DS showed Result = Not equal. While this correctly identified the change, perhaps our test goal is to confirm that, apart from the intentional change to SAP*, no other users were affected. We can achieve this using mapping filters.

- Go back to the "Compare runs" screen and ensure that "Reference program all variants" and the iData comparison are selected.
- Click "iData parameters".
- Locate the row for ZRTE_TST_R_USR GT_TAB. We need to apply a filter to both sides of the comparison to exclude the SAP* user record before the data is compared.
- Click the Transform. A icon button for GT_TAB. In the "Transformation" pop-up, find the WHERE condition input field and type the condition BNAME NE 'SAP*'. Click OK.
- Click the Transform. B icon button for GT_TAB. In its "Transformation" pop-up, enter the exact same WHERE condition, BNAME NE 'SAP*'. Click OK.
- Optionally, if we are not interested in the SO_BNAME variable for this specific test run, we can uncheck the main Include checkbox for the ZRTE_TST_R_USR SO_BNAME row on the "iData parameters" screen itself. This skips its comparison entirely.
- Click OK on the "iData parameters" screen to apply these rules.
- Re-execute the comparison.

Analysing the Refined Result: Now, when you examine the results grid, the Result for GT_TAB should show "Equal" even for the ALL and DS variants. Why? Because the mapping rules we defined instructed RTE to filter out the SAP* user row from both the reference data and the new run data before performing the comparison. This demonstrates how mapping can be used to focus the comparison and ignore known or intentional differences, allowing you to verify the stability of the remaining data set.
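The effect of the two Where conditions can be pictured with the following minimal Python sketch. It is a conceptual model, not RTE's implementation: rows are plain dictionaries, the ABAP condition BNAME NE 'SAP*' is written as a Python predicate, the comparison is a simplified order-sensitive equality check, and the sample users and CLASS values are hypothetical.

```python
from typing import Callable

Row = dict[str, str]  # one table row: field name -> value (simplified)


def apply_where(rows: list[Row], keep: Callable[[Row], bool]) -> list[Row]:
    """Keep only the rows that satisfy the condition, like a Where condition."""
    return [row for row in rows if keep(row)]


def compare(side_a: list[Row], side_b: list[Row]) -> str:
    """Simplified, order-sensitive check of two data sets (not RTE's algorithm)."""
    return "Equal" if side_a == side_b else "Not equal"


def bname_ne_sap_star(row: Row) -> bool:
    """Python equivalent of the ABAP condition BNAME NE 'SAP*'."""
    return row["BNAME"] != "SAP*"


# Hypothetical data: only the CLASS of SAP* differs between the two runs.
reference = [
    {"BNAME": "DDIC", "CLASS": "ADMIN"},
    {"BNAME": "SAP*", "CLASS": "ADMIN"},
]
current = [
    {"BNAME": "DDIC", "CLASS": "ADMIN"},
    {"BNAME": "SAP*", "CLASS": "SUPER"},
]

print(compare(reference, current))  # Not equal
print(compare(apply_where(reference, bname_ne_sap_star),
              apply_where(current, bname_ne_sap_star)))  # Equal
```

In this model, filtering only one side would leave the SAP* row in the other data set and the result would stay "Not equal", which mirrors why the same condition is entered in both Transform. A and Transform. B.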

5.5.1 Multiple Variable Entries

As you continue to develop your program and update your reference runs, you might encounter situations where the "iData parameters" screen lists the same logical variable (such as GT_TAB) multiple times. This occurs when the underlying data structure of that variable has changed over time, and different reference runs (perhaps for different variants, or older vs. newer references for the same variant) capture these different structural versions.

Let's illustrate with an example:

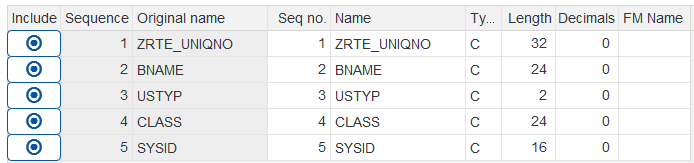

- Initial State: Suppose you have existing reference runs for several variants (

ALL,DS) ofZRTE_TST_R_USR, whereGT_TABhas an old structure (without theUSTYPfield). - Structural Code Change: You modify

ZRTE_TST_R_USRto add theUSTYPfield toGT_TAB. - Create a New Reference Run: Now, you create a new reference run specifically for the

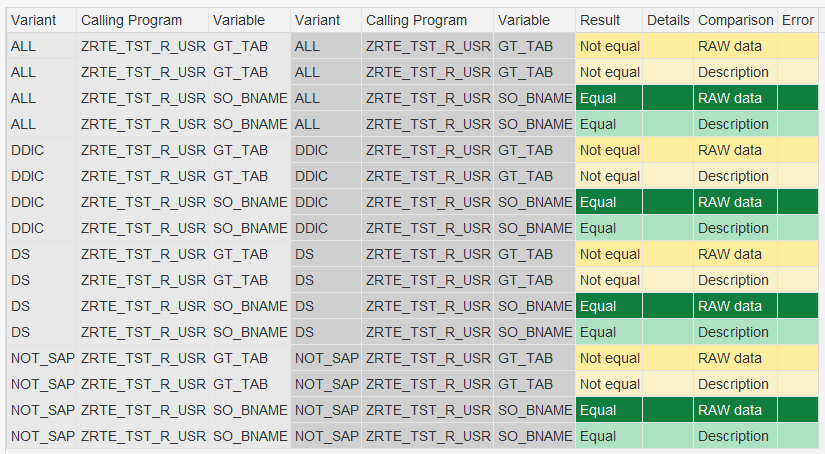

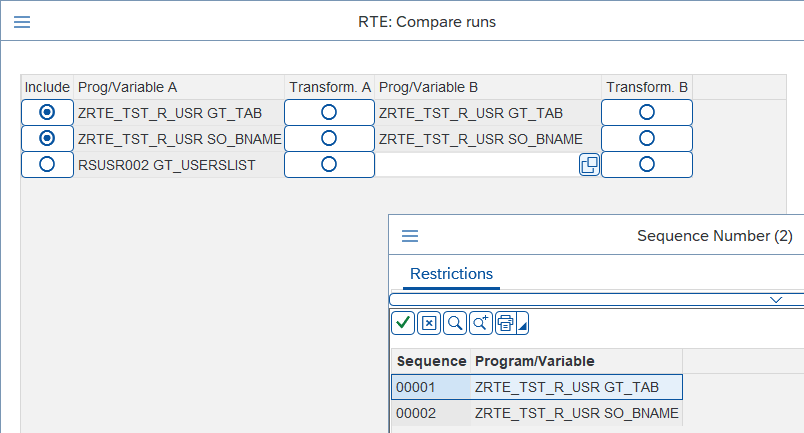

ALLvariant. This new reference run forALLwill captureGT_TABwith its new structure (includingUSTYP). However, the reference run for theDSvariant (if not updated) might still be based on the old structure ofGT_TAB. - Observation in "iData parameters": If you now go to "Compare runs", select "Reference program all variants" (which would include both

ALLandDS), and then click the "iData parameters" button, you should see something like :

Notice that GT_TAB (from ZRTE_TST_R_USR) appears on multiple lines on both the "Prog/Variable A" (reference) side and the "Prog/Variable B" (current run) side.

Explanation of Multiple Entries:

RTE lists a variable in a new row on the "iData parameters" screen if its underlying data structure is different from other instances of that same logical variable being considered in the current comparison batch.

- Line 1 (representing variant DS): Shows ZRTE_TST_R_USR GT_TAB from the old reference run of DS (old structure) being prepared for comparison against ZRTE_TST_R_USR GT_TAB from the current execution of the program for DS (new structure). This line will likely require mapping in Transform. A or Transform. B to reconcile the structural differences.

- Line 2 (representing variant ALL): Shows ZRTE_TST_R_USR GT_TAB from the new reference run of ALL (new structure) being prepared for comparison against ZRTE_TST_R_USR GT_TAB from the current execution for ALL (also new structure). In this case, because the structure of the reference run (A) now matches the structure of the current run (B), no field mapping should be needed for this pair; the structures are already aligned.

Key Takeaway

RTE does not automatically merge the entries for the same logical variable if their underlying structures differ across the runs being set up for comparison. Each unique structural version of a variable gets its own line in the "iData parameters" list, allowing you to define mapping rules tailored to how that particular structural version should be compared against its counterpart. This ensures precise control over the comparison process, even as your programs and their data structures evolve.
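To picture why GT_TAB can appear on more than one line, the following Python sketch groups reference/current pairs by the structure captured on each side. It is an illustrative model under stated assumptions, not RTE's internal logic: a structure is reduced to a tuple of field names (a real structure also has types and lengths), and the field lists are hypothetical.

```python
from collections import defaultdict
from typing import NamedTuple


class CapturedPair(NamedTuple):
    variant: str
    variable: str
    ref_fields: tuple[str, ...]  # structure captured in the reference run (side A)
    cur_fields: tuple[str, ...]  # structure captured in the current run (side B)


def group_lines(pairs: list[CapturedPair]) -> dict[tuple, list[str]]:
    """One line per distinct (variable, structure A, structure B) combination."""
    lines: dict[tuple, list[str]] = defaultdict(list)
    for pair in pairs:
        lines[(pair.variable, pair.ref_fields, pair.cur_fields)].append(pair.variant)
    return dict(lines)


# Hypothetical field lists for GT_TAB before and after USTYP was added.
OLD = ("BNAME", "CLASS")
NEW = ("BNAME", "USTYP", "CLASS")

pairs = [
    CapturedPair("DS", "GT_TAB", OLD, NEW),   # reference run not updated
    CapturedPair("ALL", "GT_TAB", NEW, NEW),  # new reference run created
]

for (variable, ref, cur), variants in group_lines(pairs).items():
    status = "aligned" if ref == cur else "mapping needed"
    print(variable, variants, status)
# GT_TAB ['DS'] mapping needed
# GT_TAB ['ALL'] aligned
```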

5.6 Cross-Check

One of RTE's most advanced capabilities is the "Cross-Check" feature. This allows you to validate your custom program's output not just against its own previous versions, but against the output of an entirely different program, typically a trusted SAP standard report or a well-established custom tool that performs a similar function. This is invaluable for ensuring your custom development aligns with standard SAP logic or established business processes.

Setting up a Cross-Check:

- Instrument the Trusted Program: First, ensure that the program you want to compare against (the 'reference' program, here the standard report RSUSR002) also exports the relevant data via RTE. This requires adding a ZCL_RTE=>EXPORT_DATA call at an appropriate point, for example through SAP's Enhancement Framework when the program is standard SAP code.

- Example: As shown in the code below, you might create an enhancement implementation at the end of function module SUSR_USERS_LIST_ALV (used by RSUSR002) to export its final user list:

```abap
ENHANCEMENT 1 Z_RTE_EXPORT.    "active version
  ZCL_RTE=>EXPORT_DATA( iv_var_name = 'GT_USERSLIST'
                        i_data      = gt_userslist ).
ENDENHANCEMENT.
```


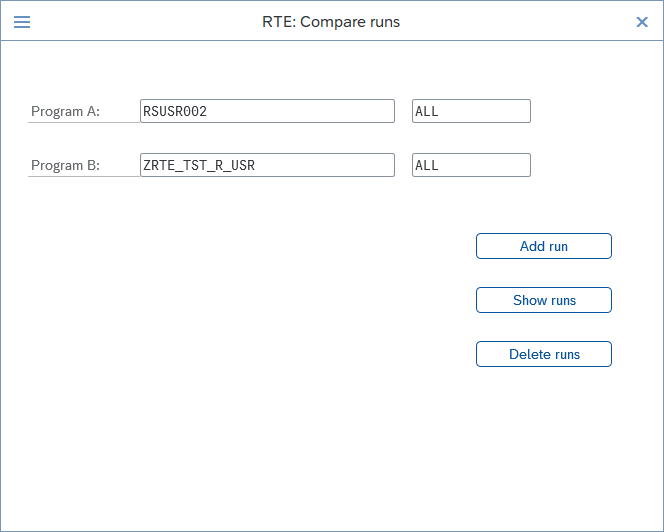

Configure the Cross-Check:

- Navigate to the "Compare runs" screen. Check the "Advanced options" checkbox.

- Click the "Cross check" button.

- A configuration pop-up appears specifically for defining cross-program comparisons.

- In the Program A row, enter the name and variant of the program you want to use as the reference point (Program: RSUSR002, Variant: ALL).

- In the Program B row, enter the name and variant of your program under test (Program: ZRTE_TST_R_USR, Variant: ALL). This often defaults to what you entered on the main comparison screen.

- Click the "Add run" button. This schedules this cross-program comparison pair for execution. You can add multiple pairs if needed.

- The "Show runs" button lets you review the list of scheduled cross-checks. The "Delete runs" button clears the entire list if you need to start over.

- Close the "Cross check" window using the standard close icon (X).

Mapping: The Essential Ingredient for Cross-Checks

Meaningful comparison between two different programs almost always requires data mapping, as it's highly unlikely that the exported variable names, field names, data types, and field order will coincidentally match perfectly.

- After configuring the cross-check and closing its window, click the "iData parameters" button (ensure that iData comparison is selected in "Comparisons").

- The "iData parameters" screen now lists the cross-check pair you scheduled. You will see the variable exported from Program A (RSUSR002 GT_USERSLIST).

- However, the Prog/Variable B column for this row will likely be empty initially, because RTE cannot guess which variable from Program B should be compared with the variable from Program A. Click the search help icon within the empty Prog/Variable B field.

- RTE presents a list of variables exported by Program B (ZRTE_TST_R_USR in our case, showing GT_TAB and SO_BNAME). Select the variable that logically corresponds to the data from Program A (here, GT_TAB).


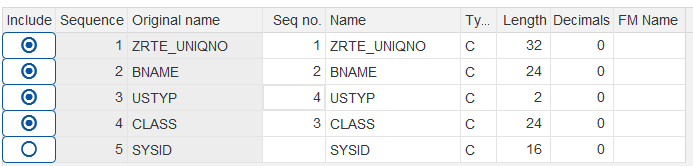

- Define Transformations: Now you must define mapping rules using the Transform. A and Transform. B buttons to reconcile the differences between RSUSR002 GT_USERSLIST and ZRTE_TST_R_USR GT_TAB.
  - Analyze and Map Transform. A (RSUSR002 GT_USERSLIST): Open its transformation window. Identify the key fields that have equivalents in GT_TAB (BNAME, USTYP, CLASS). Exclude all other fields of GT_USERSLIST that are not relevant for this comparison by unchecking their Include boxes. Use the renumber button to finalize the field sequence for side A.
  - Analyze and Map Transform. B (ZRTE_TST_R_USR GT_TAB): Open its transformation window.
    - Field Matching: Ensure that only the fields corresponding to those kept in Transform A are included. Exclude any extra fields unique to GT_TAB (such as SYSID).
    - Order Alignment: Adjust the Seq no. values in Transform B so that the order of the included fields exactly matches the sequence defined in Transform A (e.g., if A is BNAME, USTYP, CLASS, then B must also be BNAME, USTYP, CLASS, in that order). Alternatively, you can adjust A to match B's original order; what matters is that the final mapped order is identical on both sides.
    - Type and Length Harmonization: Carefully check the Type, Length, and Decimals of the corresponding fields on sides A and B. If they differ (e.g., USTYP is C(2) on one side and C(4) on the other), you must adjust one side in the mapping to match the other. Critical Best Practice: To avoid data loss through truncation during the comparison, always change the field with the shorter length to match the longer one. In the C(2) vs. C(4) example, change the C(2) field's mapping definition to C(4) rather than shortening the C(4) field to C(2).


- Once both transformations are defined, click OK on the transformation windows and then on the main "iData parameters" window.

- Execute the comparison.

- Interpreting Results: Examine the Result column for the cross-check row. If functional differences exist between the two programs (like our intentional hardcoding of SAP*'s class in ZRTE_TST_R_USR, which differs from RSUSR002's standard output), you will see "Not equal". Use the Details magnifying glass and the "Diff" tab to pinpoint these functional or configuration-driven discrepancies.

- Refining Comparison: If certain differences are known and accepted (e.g., results of a configuration change that are expected to differ from the baseline program), you can further refine the comparison by adding appropriate WHERE conditions to both the Transform A and Transform B mappings (e.g., adding BNAME NE 'SAP*' to both sides to exclude that user if it is not relevant to the current check). Remember to save your refined setup as a selection screen variant if you intend to reuse it (see Section 5.8 for details).

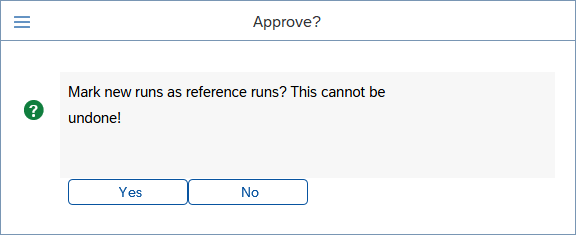
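The mapping steps for a cross-check (select fields, align Seq no., harmonize lengths) can be modelled with the following Python sketch, which also shows why the shorter field should be widened. It is a conceptual model, not RTE's implementation: the hypothetical to_char helper imitates ABAP C(n) assignment (truncate on the right, pad with blanks), and the sample rows and field lengths are hypothetical.

```python
from typing import NamedTuple

Row = dict[str, str]


class FieldMap(NamedTuple):
    name: str     # field in the exported variable
    seq_no: int   # position in the mapped structure (Seq no.)
    length: int   # mapped length of a character field, like C(n)


def to_char(value: str, length: int) -> str:
    """Imitate assignment to an ABAP C(n) field: truncate on the right, pad with blanks."""
    return value[:length].ljust(length)


def transform(rows: list[Row], mapping: list[FieldMap]) -> list[tuple[str, ...]]:
    """Keep only mapped fields, in Seq no. order, cast to the mapped length."""
    ordered = sorted(mapping, key=lambda field: field.seq_no)
    return [tuple(to_char(row[f.name], f.length) for f in ordered) for row in rows]


# Hypothetical rows: side A has an irrelevant extra field, side B has SYSID
# and a different field order. USTYP is C(4) on side A and C(2) on side B.
rows_a = [{"BNAME": "DDIC", "USTYP": "AB12", "CLASS": "ADMIN", "OTHER": "x"}]
rows_b = [{"SYSID": "DEV", "CLASS": "ADMIN", "BNAME": "DDIC", "USTYP": "AB"}]

# Recommended: widen the shorter USTYP on side B to C(4).
map_a = [FieldMap("BNAME", 1, 12), FieldMap("USTYP", 2, 4), FieldMap("CLASS", 3, 12)]
map_b = [FieldMap("CLASS", 3, 12), FieldMap("BNAME", 1, 12), FieldMap("USTYP", 2, 4)]
print(transform(rows_a, map_a) == transform(rows_b, map_b))  # False: difference kept

# Anti-pattern: shorten side A's USTYP to C(2) so both sides use C(2).
short = [FieldMap("BNAME", 1, 12), FieldMap("USTYP", 2, 2), FieldMap("CLASS", 3, 12)]
print(transform(rows_a, short) == transform(rows_b, short))  # True: difference hidden
```

With USTYP widened to C(4), the difference between AB12 and AB is still detected; shortening side A to C(2) makes the rows compare as equal and silently hides it, which is the data loss the best practice warns about.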

5.7 Approving New Reference Runs

Development and configuration changes are iterative. After implementing changes (code or configuration), performing comparisons, meticulously analyzing differences using mapping, and ultimately confirming that the program's current behavior is indeed the new correct baseline, your original reference runs might become obsolete. The mappings required to compare against them might become complex and counter-productive for future tests.

RTE provides a streamlined way to update your baseline. Instead of manually re-creating reference runs one by one using the "Run program" transaction, you can approve the runs that were just generated during the comparison process itself, promoting them to become the new official reference runs.

- Verification is Key: Before proceeding, be absolutely certain that the results shown in your current comparison grid accurately represent the desired, correct state of the program following your latest changes.

- Initiate Approval: In the ALV toolbar displaying the comparison results, locate and click the "Approve" button (depicted with a checkmark).

- Final Confirmation: Because this action is significant, RTE presents a confirmation pop-up. It explicitly warns you that clicking "Yes" will designate the runs just executed during the comparison as the new reference runs and, crucially, that this action cannot be undone.

- Commitment: Only if you are completely confident that the current state is the correct new baseline should you click "Yes".

Consequences of Approval: When you approve, RTE updates its internal records. For each program/variant combination included in the comparison, the run generated during that comparison execution replaces the previous run that was marked as the reference.
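The bookkeeping behind approval can be summarized with a short Python model. It is a sketch under assumptions, not RTE's implementation: reference runs are kept in a dictionary keyed by (program, variant), the run IDs are hypothetical, and combinations that were not part of the comparison are assumed to keep their existing reference run.

```python
RunKey = tuple[str, str]  # (program, variant)


def approve(references: dict[RunKey, str],
            comparison_runs: dict[RunKey, str]) -> dict[RunKey, str]:
    """Return the reference table after approving the runs of one comparison.

    In RTE the real approval cannot be undone; this model only returns a new
    dictionary so that the before and after states can be inspected.
    """
    updated = dict(references)       # assumed: other combinations keep their reference
    updated.update(comparison_runs)  # runs from this comparison become the references
    return updated


# Hypothetical run IDs; only variant ALL was part of this comparison.
references = {
    ("ZRTE_TST_R_USR", "ALL"): "RUN_001",
    ("ZRTE_TST_R_USR", "DS"): "RUN_002",
}
comparison_runs = {("ZRTE_TST_R_USR", "ALL"): "RUN_104"}

print(approve(references, comparison_runs))
# {('ZRTE_TST_R_USR', 'ALL'): 'RUN_104', ('ZRTE_TST_R_USR', 'DS'): 'RUN_002'}
```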

Strong Recommendation: Make approval a regular part of your workflow after verifying code or configuration changes, especially following any modifications that alter the structure (fields, types, order) of variables exported via EXPORT_DATA, or after confirming that a configuration change has the desired, stable outcome. Approving the new state as the reference significantly simplifies subsequent regression tests or verifications. Future comparisons against this updated reference start from a structurally identical baseline, reducing or entirely eliminating the need for the potentially complex data mappings that were required to bridge the gap with the older, now-obsolete reference run.

This keeps your testing process efficient and focused on detecting new, unintended changes.

5.8 Preserving Your Mapping

Configuring intricate mapping rules, especially for complex structural changes or cross-program comparisons, can involve considerable effort. Repeating this setup for subsequent test cycles would be inefficient. RTE provides a standard way to save your entire comparison configuration, including all mapping details, for future use:

- Save Selection Screen Variant: After you have defined your comparison mode, selected programs/runs, and configured all necessary "Advanced options" (including enabling iData, setting up "Comparisons", defining "Cross checks", and crafting transformations within "iData parameters"), save these settings as a selection screen variant of the "Compare runs" screen by clicking the standard SAP Save Variant icon on the toolbar.

- What's Included: Saving a selection screen variant captures the entire state of the "Compare runs" input screen at that moment. This includes:

- The selected comparison mode (Reference program/variant, All variants, 2 runs).

- The specified Program names and/or Run IDs.

- The state of the "Advanced options" checkbox.

- The selections made within the "Comparisons" pop-up (e.g., which comparison types, such as iData, are active).

- All defined "Cross check" pairs.

-